As artificial intelligence (AI) continues to evolve, so do the complex concepts and principles that support it. One such concept that has gained considerable attention in recent years is entropy. In simple terms, entropy is a measure of the disorder or randomness in a system. But what does this have to do with AI, and why is it important?

In the world of AI, entropy plays a critical role in understanding and optimizing machine learning algorithms. By measuring the uncertainty or randomness in data, AI systems can better identify patterns and make more accurate predictions. However, as with many technical terms in the field of AI, entropy can be difficult to grasp without a deeper understanding of the underlying principles. In this article, we’ll delve into the concept of entropy in AI, explore its applications, and discuss how it can be used to improve the effectiveness of machine learning systems.

What is Entropy in Artificial Intelligence?

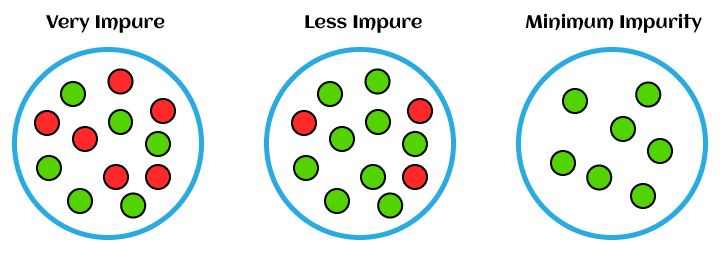

Entropy is an important concept in Artificial Intelligence (AI). It measures the uncertainty in a probability distribution, such as the distribution over possible outcomes, labels, or actions, and AI agents use it to guide decisions and behavior. Because a problem with many equally plausible answers has high entropy, it also serves as a rough indicator of how complex a system is or how hard a problem is to solve. Entropy can help an AI system make decisions, understand data, and improve its performance.

How Does Entropy Work in Artificial Intelligence?

Entropy is used to measure the uncertainty of a system or decision. It is computed from the probabilities of the possible outcomes and is often used in decision-making algorithms. For example, an AI agent might use entropy to decide which move to make in a game, or which item to pick up in an environment, by comparing how uncertain it is about the result of each option.

What Is the Entropy Measurement?

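The entropy measurement is a measure of uncertainty. It is calculated by taking the logarithm of the probability of each possible outcome, weighting it by that probability, summing over all outcomes, and negating the result. With base-2 logarithms the result is expressed in bits. The higher the entropy, the higher the uncertainty, which means the AI system has less information on which to base a decision.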
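To make the calculation concrete, here is a minimal Python sketch of the standard Shannon definition, which sums `p * log2(1 / p)` over the outcomes. The function name `shannon_entropy` and the example distributions are illustrative assumptions, not part of any particular library.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy, in bits, of a discrete distribution.

    `probs` is assumed to be a list of probabilities that sum to 1.
    Outcomes with zero probability contribute nothing to the sum.
    """
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

# Illustrative distributions (assumed for this example).
fair_coin = shannon_entropy([0.5, 0.5])    # two equally likely outcomes
biased_coin = shannon_entropy([0.9, 0.1])  # one outcome dominates
certain = shannon_entropy([1.0, 0.0])      # the outcome is known in advance

print(fair_coin, biased_coin, certain)
```

The fair coin gives exactly 1 bit, the certain outcome gives 0 bits, and the biased coin falls in between, which matches the intuition that entropy grows with uncertainty.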

How Is Entropy Used in AI?

Entropy is used in AI to assess the complexity of a problem or decision. A decision with many roughly equally likely outcomes has high entropy, while one with a single dominant outcome has low entropy. In this sense entropy can also indicate the difficulty of a problem or task: the higher the entropy, the harder the outcome is to predict.

Entropy can also be used to assess the performance of an AI system. For example, a classifier is commonly scored with cross-entropy, which measures how much probability the model assigns to the correct answers. The higher the cross-entropy, the lower the performance.
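One common way to turn this idea into a performance score is cross-entropy loss: the average negative log-probability a model assigns to the correct class. The sketch below is a hedged illustration; the function name `cross_entropy`, the labels, and both sets of predictions are made up for the example.

```python
import math

def cross_entropy(true_labels, predicted_probs):
    """Average negative log-probability (in nats) given to the correct class.

    `true_labels` holds class indices; `predicted_probs` holds one list of
    class probabilities per example. Lower values mean better predictions.
    """
    eps = 1e-12  # guards against log(0) when a model assigns zero probability
    losses = [
        -math.log(max(probs[label], eps))
        for label, probs in zip(true_labels, predicted_probs)
    ]
    return sum(losses) / len(losses)

# Hypothetical data: three examples, two classes.
labels = [0, 1, 1]
good_model = [[0.9, 0.1], [0.2, 0.8], [0.1, 0.9]]  # mostly right and confident
poor_model = [[0.5, 0.5], [0.6, 0.4], [0.7, 0.3]]  # unsure or wrong

print(cross_entropy(labels, good_model))
print(cross_entropy(labels, poor_model))
```

The model that puts more probability on the correct labels receives the lower score, so a rising cross-entropy is a signal that performance is getting worse.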

What Are the Benefits of Entropy in AI?

The use of entropy in AI has many benefits. It gives a single number for how uncertain or complex a problem is, which helps AI agents compare options and make better decisions. Entropy can also be used to measure the performance of an AI system, helping to identify weaknesses and areas for improvement. Finally, comparing entropy across tasks makes it easier to identify which ones are more challenging and require more attention.

Frequently Asked Questions about Entropy in Artificial Intelligence

Entropy is an important concept in the field of artificial intelligence. It is used to measure the uncertainty of a system and can be used to make decisions in uncertain environments. The questions below cover what entropy is, how it is used in AI, and its implications.

What is Entropy in Artificial Intelligence?

Entropy is a measure of uncertainty in a system. Rather than describing how likely a single outcome is, it summarizes how unpredictable the outcomes are on average. In AI, entropy is used to quantify the level of uncertainty in a particular situation. For example, it can be used to measure the uncertainty of the class labels at a node of a decision tree or the uncertainty of a prediction made by a neural network.

Entropy is calculated using the Shannon entropy formula, which takes into account the probability of each outcome. Entropy is zero when one outcome is certain and reaches its maximum when all outcomes are equally likely, so the more uncertain the system, the higher the entropy.
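For reference, the standard form of the Shannon entropy of a discrete random variable is shown below, followed by two worked cases. The symbols $X$, $x_i$, and $n$ are notation chosen here for illustration, and the logarithm is taken in base 2 so that the result is in bits.

$$
H(X) = -\sum_{i=1}^{n} p(x_i)\,\log_2 p(x_i)
$$

With four equally likely outcomes, each term is $-0.25 \log_2 0.25 = 0.5$, so

$$
H = 4 \times 0.5 = 2 \text{ bits}.
$$

With three outcomes of probability $0.5$, $0.25$, and $0.25$, the distribution is less uniform and the entropy is lower:

$$
H = 0.5 \times 1 + 0.25 \times 2 + 0.25 \times 2 = 1.5 \text{ bits}.
$$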

How is Entropy Used in Artificial Intelligence?

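Entropy is used in AI to make decisions in uncertain environments. For example, when a decision tree is trained, entropy is used to choose which feature to split on at each node: the split that reduces entropy the most (the one with the highest information gain) is preferred. Entropy is also used in neural networks to measure the uncertainty of a prediction.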
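In decision tree learning, entropy is typically applied through information gain: the entropy of the labels before a split minus the weighted entropy of the labels after it. The sketch below illustrates that calculation under simple assumptions; the helper names `label_entropy` and `information_gain` and the toy "yes"/"no" labels are hypothetical.

```python
import math
from collections import Counter

def label_entropy(labels):
    """Entropy, in bits, of the empirical label distribution in `labels`."""
    total = len(labels)
    return sum(
        (count / total) * math.log2(total / count)
        for count in Counter(labels).values()
    )

def information_gain(parent, children):
    """Entropy of `parent` minus the size-weighted entropy of `children`.

    `children` is a list of label lists produced by splitting `parent`.
    """
    total = len(parent)
    weighted = sum(len(child) / total * label_entropy(child) for child in children)
    return label_entropy(parent) - weighted

# Toy data: eight examples, evenly split between two classes.
parent = ["yes"] * 4 + ["no"] * 4

# A split that separates the classes perfectly.
clean_split = [["yes"] * 4, ["no"] * 4]
# A split that leaves both children as mixed as the parent.
useless_split = [["yes", "yes", "no", "no"], ["yes", "yes", "no", "no"]]

print(information_gain(parent, clean_split))
print(information_gain(parent, useless_split))
```

A tree-building algorithm that uses this criterion would prefer the first split, because it removes all of the uncertainty about the label, while the second removes none.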

Entropy can also be used to measure the quality of a model. If a model's predictions have high entropy, the model is uncertain about them, and those predictions should be treated with caution. If its predictions have low entropy, the model is confident. Confidence is not the same as correctness, however, so low entropy should be checked against accuracy on held-out data before the model is used with confidence.
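One hedged way to act on this in practice is to compute the entropy of each predicted class distribution and flag the uncertain ones for a closer look. In the sketch below, the entropy is divided by its maximum possible value so that the score lies between 0 and 1. The names `normalized_entropy` and `needs_review`, the threshold of 0.8, and the example probabilities are all assumptions made for illustration.

```python
import math

def normalized_entropy(probs):
    """Entropy of a predicted class distribution, scaled to the range [0, 1].

    A value of 0 means the model is fully confident; 1 means it considers
    every class equally likely.
    """
    if len(probs) < 2:
        return 0.0
    bits = sum(p * math.log2(1 / p) for p in probs if p > 0)
    return bits / math.log2(len(probs))

# Hypothetical cutoff; a real value would be tuned on validation data.
REVIEW_THRESHOLD = 0.8

def needs_review(probs, threshold=REVIEW_THRESHOLD):
    """Return True when a prediction is too uncertain to accept as-is."""
    return normalized_entropy(probs) > threshold

confident = [0.97, 0.02, 0.01]  # model strongly favors the first class
unsure = [0.34, 0.33, 0.33]     # model cannot tell the classes apart

print(needs_review(confident), needs_review(unsure))
```

A low score only says the model is confident, not that it is right, so a check like this complements accuracy measurements rather than replacing them.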

What are the Implications of Entropy in Artificial Intelligence?

Entropy has implications for AI in two major ways. First, entropy is used to make decisions in uncertain environments. This means that AI systems can make better decisions in uncertain situations. Second, entropy can be used to measure the quality of a model. This means that AI systems can be evaluated and improved to make better predictions.

Overall, entropy is an important concept in AI. It is used to measure the uncertainty of a system, which can be used to make better decisions and evaluate models.

What are the Limitations of Entropy in Artificial Intelligence?

Entropy has some limitations in AI. First, it is an imperfect measure of uncertainty. It is computed from estimated probabilities, so if those estimates are wrong, the entropy of a system may not accurately reflect its actual uncertainty. Second, entropy depends only on the probabilities of the outcomes, not on the structure of the system that produces them. This means that it may not accurately capture the uncertainty of a complex system.

Finally, entropy is a static measure of uncertainty. It describes a single probability distribution and does not take changes over time into account. For example, if the uncertainty of a system changes over time, an entropy value computed earlier stays the same until it is recomputed from up-to-date probabilities.
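One possible workaround, sketched here under simple assumptions, is to recompute entropy over successive windows of recent observations so that changes become visible. The function names `window_entropy` and `entropy_over_time`, the window size, and the toy stream of symbols are hypothetical.

```python
import math
from collections import Counter

def window_entropy(symbols):
    """Entropy, in bits, of the empirical distribution of `symbols`."""
    total = len(symbols)
    return sum(
        (count / total) * math.log2(total / count)
        for count in Counter(symbols).values()
    )

def entropy_over_time(stream, window):
    """Entropy of each consecutive, non-overlapping window of `stream`."""
    return [
        window_entropy(stream[start:start + window])
        for start in range(0, len(stream) - window + 1, window)
    ]

# Toy stream: fully predictable at first, then alternating between two symbols.
stream = list("AAAAAAAA" + "ABABABAB")

history = entropy_over_time(stream, window=8)
print(history)
```

Here the first window has zero entropy and the second has one bit, a change that a single entropy value computed over the whole stream would hide.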

What are the Applications of Entropy in Artificial Intelligence?

Entropy has many applications in AI. It is used to make decisions under uncertainty, for example when building decision trees and when interpreting the predictions of neural networks. It is also used to measure the quality of a model and to evaluate models. Entropy can also be used to measure the complexity of a system and to identify patterns in data.

Entropy is a powerful tool in AI and is used in many applications. It can be used to make better decisions in uncertain environments, to measure the quality of a model, and to identify patterns in data.

In conclusion, entropy in artificial intelligence is a critical concept that is used to measure the uncertainty or randomness of data. It plays a crucial role in decision-making processes for AI systems and helps to ensure that they make informed choices. Entropy is particularly useful in image and speech recognition, natural language processing, and machine learning algorithms.

As the field of artificial intelligence continues to evolve, entropy will undoubtedly become an increasingly important concept. It will help to improve the accuracy and reliability of AI systems, making them more effective in a wide range of applications. By understanding and utilizing the principles of entropy, we can create AI systems that are capable of learning, adapting, and making decisions in even the most complex and unpredictable environments.