As the world relies more and more on technology, machine learning is proving to be a game-changer across industries. From healthcare to finance, machine learning is transforming the way we approach problems and make decisions. Behind many successful machine learning models, particularly deep learning models, is a hardware component that plays a vital role: the GPU.

A GPU, or graphics processing unit, is a specialized processor designed to perform very large numbers of mathematical computations in parallel. Although GPUs were originally developed to render graphics for video games and films, their high throughput and parallel computing capabilities have made them an essential component in machine learning. In this article, we’ll explore why GPUs are used for machine learning and how they have transformed the field.

What is Machine Learning?

Machine learning is a branch of artificial intelligence that uses algorithms to learn from data and make predictions or decisions without being explicitly programmed. It enables computers to learn from past experiences and adapt to new data, allowing for data-driven decision making and autonomous problem-solving.

Why Are GPUs Used for Machine Learning?

GPUs are specialized chips originally designed to render computer graphics, and they are increasingly used for machine learning. For highly parallel workloads such as neural network training, GPU-accelerated computing can deliver far higher throughput than CPU-only computing. Their main advantages are massive parallelism, high core counts, cost-effectiveness, scalability, and flexibility, which the sections below cover in turn.

Parallel Processing Capabilities

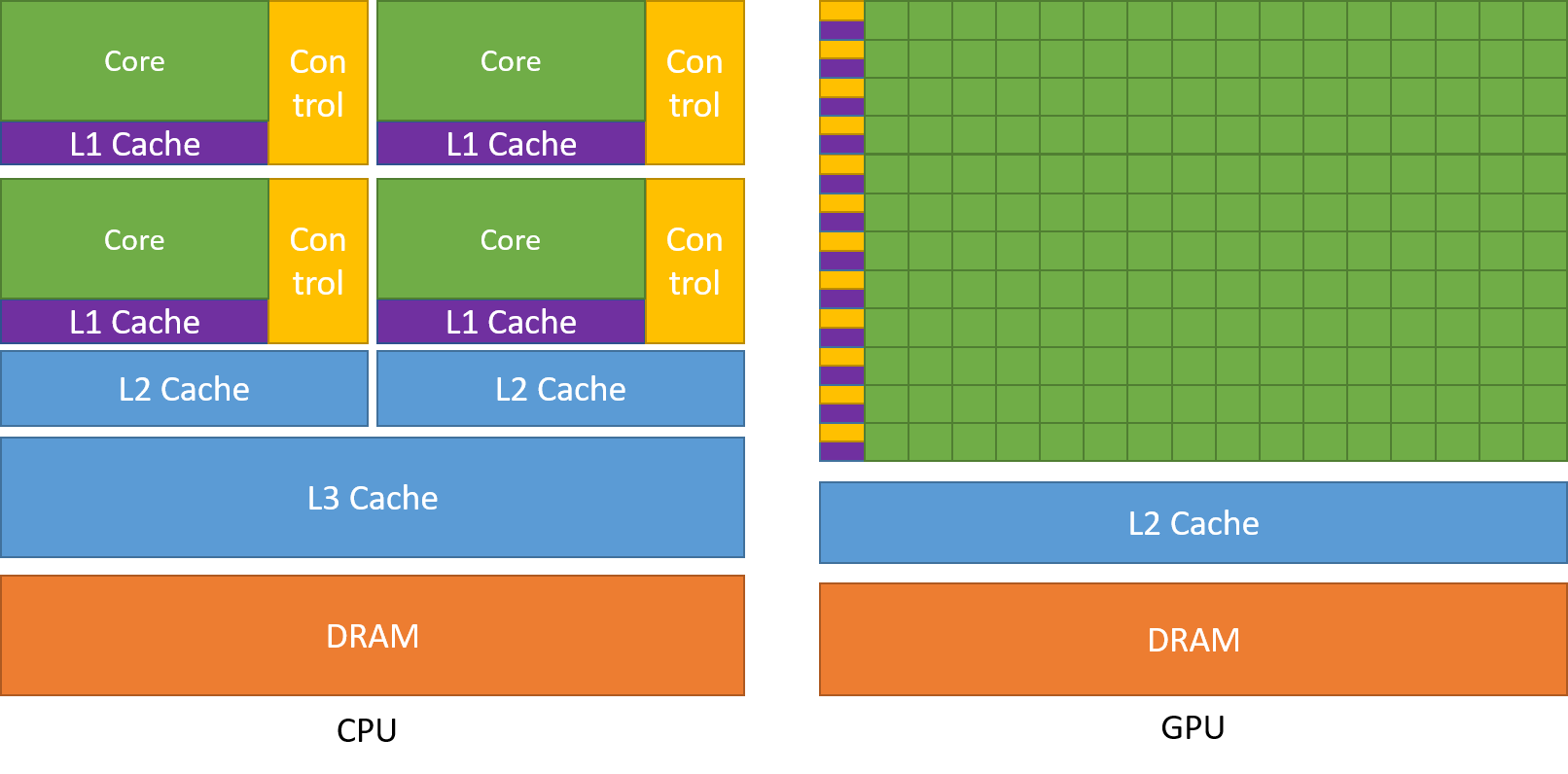

GPUs can perform many operations simultaneously, which matches the parallel workloads at the heart of machine learning. Rather than executing a few complex instruction streams very quickly, as a CPU does, a GPU applies the same instruction to many data elements at once. This suits tasks such as training deep neural networks, where the same arithmetic must be applied to huge numbers of parameters and training examples.
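The key property is that many machine learning operations apply the same computation independently to every element of a large array. As a rough CPU-side illustration (not GPU code), the sketch below splits a ReLU activation into chunks and hands each chunk to a worker in a thread pool; because no element depends on any other, the chunked result matches the serial one. The chunk size and worker count are arbitrary assumptions, and in CPython these threads will not actually speed up a pure-Python loop. On a GPU, thousands of hardware threads would each handle elements at the same time.

```python
from concurrent.futures import ThreadPoolExecutor

def relu(x):
    """ReLU activation: the same simple rule applied to every element."""
    return x if x > 0.0 else 0.0

def relu_serial(values):
    # One element after another, like a single core working alone.
    return [relu(v) for v in values]

def relu_chunked(values, chunk_size=4, workers=4):
    # Split the input into independent chunks; chunk_size and workers are
    # illustrative assumptions, not tuned values.
    chunks = [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in chunk order, so they can be concatenated directly.
        results = pool.map(relu_serial, chunks)
        return [y for chunk in results for y in chunk]

data = [-3.0, 1.5, 0.0, 2.0, -0.5, 4.0, -1.0, 0.25, 3.0, -2.0]
print(relu_chunked(data) == relu_serial(data))  # True
```

The design choice to illustrate is that the work divides cleanly: any split of the array gives the same answer, which is exactly what lets a GPU spread it across many cores.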

High Core Counts

Whereas a CPU typically has a small number of powerful cores, a GPU contains thousands of simpler cores that process data in parallel, backed by high-bandwidth memory that keeps those cores supplied with data. This combination lets GPUs work through the large arrays of numbers used in machine learning much faster than traditional CPUs.
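To put thousands of cores to work, GPU programming models such as NVIDIA's CUDA give each thread a global index computed from its block index, the block size, and its position within the block, and each thread processes the element at that index. The sketch below simulates that mapping in plain Python for a vector addition. The block size of 256 is an assumption (a common choice, not a requirement), and the nested loops only stand in for threads that real hardware would run concurrently.

```python
def launch_vector_add(a, b, block_dim=256):
    """Simulate a CUDA-style launch of a vector-add kernel in pure Python."""
    n = len(a)
    out = [0.0] * n
    # Enough blocks to cover every element (ceiling division).
    grid_dim = (n + block_dim - 1) // block_dim
    for block_idx in range(grid_dim):          # on a GPU, blocks run concurrently
        for thread_idx in range(block_dim):    # ...and so do threads within a block
            # Global index, as in CUDA: blockIdx.x * blockDim.x + threadIdx.x
            i = block_idx * block_dim + thread_idx
            if i < n:  # the last block may contain threads with no element to process
                out[i] = a[i] + b[i]
    return out

a = [float(i) for i in range(1000)]
b = [2.0 * i for i in range(1000)]
c = launch_vector_add(a, b)
print(c[:3], c[-1])  # [0.0, 3.0, 6.0] 2997.0
```

With 1,000 elements and blocks of 256 threads, four blocks are launched; the bounds check keeps the 24 surplus threads in the last block from writing past the end of the array.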

Cost-Effectiveness

High-end GPUs can cost more than CPUs, but on highly parallel workloads they often deliver much more computation per dollar and per watt, which makes them cost-effective for machine learning. That efficiency matters most for large-scale computing tasks, where energy and hardware costs add up quickly.

Scalability

GPU computing scales with the demands of an application. A single server can hold several GPUs, multiple servers can be linked into a cluster, and cloud providers let teams rent additional GPUs when a workload grows. Larger models and datasets can therefore be handled by distributing the work across more GPUs rather than waiting for a single processor to finish.
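One common way to scale training across several GPUs is data parallelism: each device holds a copy of the model, computes gradients on its own slice of the batch, and the per-device gradients are averaged (an all-reduce step in real frameworks) before the shared update. The sketch below checks the arithmetic behind this with a one-parameter linear model and simulated devices. The model, data, and device count are illustrative assumptions, and averaging shard gradients reproduces the full-batch gradient here only because the shards are equal in size.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean squared error with respect to w for the toy model y_hat = w * x."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n

def data_parallel_grad(w, xs, ys, num_devices):
    # Assumption: the batch divides evenly, so every simulated device gets an equal shard.
    assert len(xs) % num_devices == 0, "equal shards assumed"
    shard = len(xs) // num_devices
    grads = []
    for d in range(num_devices):
        lo, hi = d * shard, (d + 1) * shard
        # Each simulated device computes its gradient without seeing the other shards.
        grads.append(grad_mse(w, xs[lo:hi], ys[lo:hi]))
    # Averaging stands in for the all-reduce across devices.
    return sum(grads) / num_devices

xs = [float(i) for i in range(8)]
ys = [3.0 * x for x in xs]  # toy data generated by the "true" weight 3.0
w = 1.0
full = grad_mse(w, xs, ys)
parallel = data_parallel_grad(w, xs, ys, num_devices=4)
print(abs(full - parallel) < 1e-9)  # True
```

Because each device only needs its own shard, adding devices lets the same batch be processed in less time, or a larger batch in the same time.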

Flexibility

GPUs are highly flexible. The same GPU can be used for both training and inference, and general-purpose programming platforms such as NVIDIA's CUDA, together with frameworks such as PyTorch and TensorFlow, make it practical to run many kinds of workloads on them. Beyond machine learning, GPUs also handle tasks such as image and video processing, making them a versatile computing resource.

Frequently Asked Questions

GPUs (graphics processing units) are specialized computer processors that are used to accelerate machine learning applications. They are used to speed up the training process of neural networks, as well as other machine learning algorithms.

What is a GPU?

A GPU is a specialized processor originally designed for computer graphics. GPUs perform calculations in parallel, meaning they can carry out many operations simultaneously. This lets them process large datasets quickly, which makes them well suited to machine learning.

Why Are GPUs Used for Machine Learning?

GPUs are used for machine learning because they can process large amounts of data quickly. Neural networks, which underpin many modern machine learning systems, must process large amounts of data during training. GPUs carry out this work in parallel, so networks train faster. The speed does not make a model more accurate by itself, but it makes it practical to train larger models on more data, which often improves results.

What are the Benefits of Using GPUs for Machine Learning?

The main benefit of using GPUs for machine learning is speed. On parallel workloads, GPUs can process data far faster than traditional CPUs, which can substantially reduce the time it takes to train a model. Shorter training times let developers iterate more quickly, test more ideas, and bring models into production sooner.

Are GPUs the Only Option for Machine Learning?

No, GPUs are not the only option for machine learning. CPUs are still used for many applications, particularly smaller models and classical algorithms, and other specialized hardware such as FPGAs (field-programmable gate arrays) and TPUs (tensor processing units) can be used as well. However, GPUs are currently the most widely used accelerators for machine learning, thanks to their strong performance, cost-effectiveness, and mature software ecosystem.

How Do I Choose the Right GPU for Machine Learning?

The right GPU for machine learning depends on the application you are building. Generally, look for a GPU with enough memory to hold your model and data, high memory bandwidth, and a large number of cores. Consider the type of machine learning you are doing, as some workloads, such as training large neural networks, demand far more powerful GPUs than others. Finally, weigh each GPU's price against its performance and memory, since the most expensive model is not always the best value for your workload.
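A quick way to judge whether a model fits in a GPU's memory is to multiply its parameter count by the bytes stored per parameter. The sketch below uses commonly cited rules of thumb, which are assumptions rather than exact figures for any framework: 2 bytes per parameter for 16-bit inference weights, 4 bytes for 32-bit weights, and about 16 bytes per parameter for mixed-precision training with the Adam optimizer (16-bit weights and gradients, 32-bit master weights, and two 32-bit optimizer states). It deliberately ignores activations, batch size, and framework overhead, which can add substantially more.

```python
# Rough bytes per parameter: rules of thumb (assumptions), not exact for any framework.
BYTES_PER_PARAM = {
    "fp16_inference": 2,        # 16-bit weights only
    "fp32_inference": 4,        # 32-bit weights only
    "mixed_adam_training": 16,  # 2 (fp16 weights) + 2 (fp16 grads) + 4 (fp32 master) + 4 + 4 (Adam moments)
}

def estimate_memory_gb(num_params, mode):
    """Lower-bound estimate in GB (1 GB = 1e9 bytes); activations are not included."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9

params = 7_000_000_000  # a hypothetical 7-billion-parameter model
for mode in BYTES_PER_PARAM:
    print(f"{mode}: ~{estimate_memory_gb(params, mode):.0f} GB")
```

For the hypothetical 7-billion-parameter model this prints roughly 14 GB, 28 GB, and 112 GB, which shows why a card that can serve a model for inference may still be far too small to train it.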

In conclusion, GPUs have become an integral part of machine learning because they can process large amounts of data in parallel. Training machine learning models requires processing huge amounts of data, and GPUs provide the computational power to handle these tasks. In particular, GPUs excel at large matrix operations, which are a core component of many machine learning algorithms.
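To see why matrix operations suit GPUs, note that in C = AB each entry C[i][j] is the dot product of row i of A with column j of B, and none of these m × n dot products depends on another. The pure-Python sketch below computes the entries in a shuffled order to show that the order does not matter, and counts the roughly 2·m·n·k floating-point operations (one multiply and one add per term, by the usual convention) that a GPU can spread across its cores. The example matrices and sizes are arbitrary.

```python
import random

def matmul_any_order(A, B, seed=0):
    """Compute C = A @ B, visiting the output entries in a random order."""
    m, k = len(A), len(A[0])
    k2, n = len(B), len(B[0])
    assert k == k2, "inner dimensions must match"
    C = [[0.0] * n for _ in range(m)]
    cells = [(i, j) for i in range(m) for j in range(n)]
    random.Random(seed).shuffle(cells)  # any order works: the entries are independent
    for i, j in cells:
        C[i][j] = sum(A[i][p] * B[p][j] for p in range(k))  # one dot product per entry
    return C

def matmul_flops(m, k, n):
    # Conventional count: one multiply and one add per term of each of the m * n dot products.
    return 2 * m * k * n

A = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]       # arbitrary 2 x 3 example
B = [[7.0, 8.0], [9.0, 10.0], [11.0, 12.0]]  # arbitrary 3 x 2 example
print(matmul_any_order(A, B))  # [[58.0, 64.0], [139.0, 154.0]]
print(matmul_flops(4096, 4096, 4096))  # 137438953472
```

Even a single 4096 × 4096 matrix product involves about 137 billion operations, split into roughly 16.8 million independent dot products, which is the kind of work a GPU's many cores are built to share.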

As machine learning grows in importance across industries, so does the demand for GPUs. As GPU technology advances, we can expect faster and more efficient data processing, which should make it practical to train larger and more capable models. GPUs are therefore likely to remain central to machine learning, and their integration with machine learning systems will probably deepen in the years ahead.