As AI grows in importance, with 98% of company leaders recognizing its strategic value, investment in AI is expected to rise significantly. Global AI spending is projected to reach $300 billion by 2026, accompanied by a tenfold increase in the electricity used for AI operations. The challenge for businesses is to harness AI’s potential while minimizing its environmental impact.

In the United States, demand for AI is expected to double data center power consumption by 2030, to 35 GW. The main driver is the deployment of AI-ready racks that draw 40 kW to 60 kW each, which underscores the need for energy-efficient solutions.

To tackle these energy challenges effectively, three main strategies are recommended:

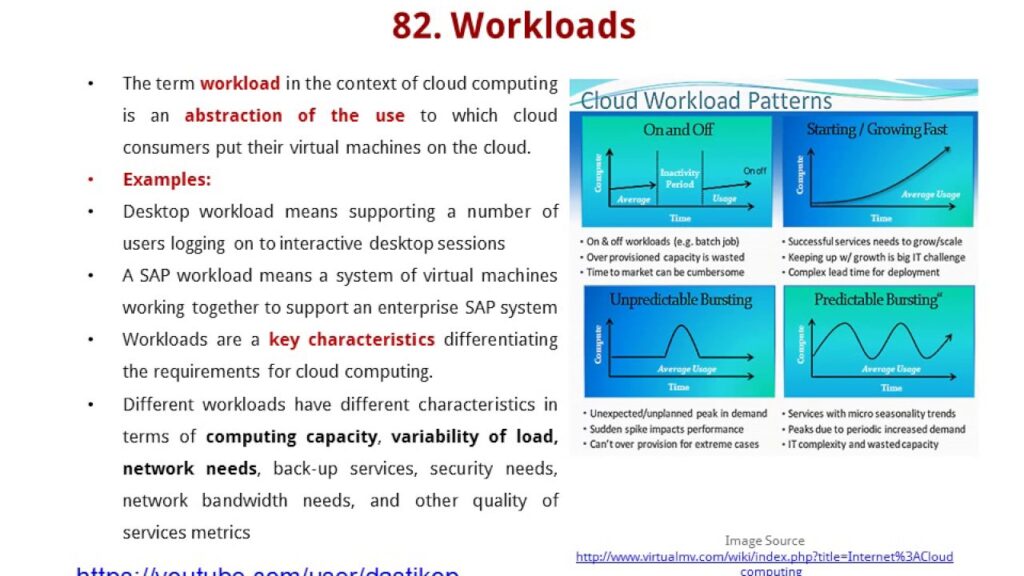

- Choosing the right computing resources for AI workloads, distinguishing between training and inference requirements.

- Optimizing performance and energy efficiency within existing data center infrastructures.

- Promoting sustainable AI development through collaborative efforts across the ecosystem.

CPUs vs. GPUs for AI Inference Workloads

Contrary to popular belief, CPUs are well suited to most AI tasks, particularly inference workloads that do not need the massive parallel processing power of GPUs. CPUs offer a balance of performance, energy efficiency, and cost-effectiveness, which makes them a good fit for diverse, less-intensive workloads.

Software optimization libraries and tooling can improve AI performance on CPUs, simplify deployment, and reduce energy consumption. Model optimization goes further, making models more compact and energy-efficient, typically with little or no loss of accuracy.
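The article does not name specific optimization techniques. One widely used example of model optimization is post-training quantization, which stores weights as 8-bit integers instead of 32-bit floats and so cuts weight storage by roughly 4x. The sketch below shows symmetric, per-tensor int8 quantization in plain Python. It is a simplified illustration under that assumption, not the method of any particular library or vendor; production toolchains add per-channel scales, calibration data, and quantized compute kernels.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of float weights to int8 values.

    Illustrative sketch only: maps [-max_abs, max_abs] onto [-127, 127]
    using a single scale factor for the whole tensor.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an all-zero tensor, which would otherwise divide by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 0.9]
    q, s = quantize_int8(weights)
    restored = dequantize_int8(q, s)
    print("int8 values:", q)
    print("restored:   ", restored)
    # float32 uses 4 bytes per weight; int8 uses 1 byte (ignoring the scale).
    print("bytes float32 vs int8:", len(weights) * 4, "vs", len(q))
```

Each restored weight differs from the original by at most half a quantization step (`scale / 2`), which is why accuracy loss is often small for well-behaved weight distributions.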

Choosing the Right Compute for AI Workloads

To get the most out of AI while staying energy efficient, enterprises should match CPU capabilities to their specific AI priorities: identify their critical AI models, define performance requirements for each, evaluate specialized solutions, scale efficiently, right-size infrastructure, and plan for future flexibility.
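As a rough illustration of the right-sizing step, the sketch below picks, from a list of candidate server types, the configuration that meets a throughput target with the lowest total power draw. The candidate names, throughput figures, power figures, and the 20% headroom default are all hypothetical placeholders; in practice the numbers would come from benchmarking your own models, and a real evaluation would also weigh latency, cost, and memory.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Candidate:
    name: str              # hypothetical server/instance label
    throughput_rps: float  # measured inferences per second per server for the target model
    power_watts: float     # measured average power per server at that load


def right_size(
    candidates: List[Candidate], target_rps: float, headroom: float = 0.2
) -> Optional[Tuple[Candidate, int, float]]:
    """Return (candidate, server_count, total_watts) with the lowest total power
    that still serves target_rps plus the given headroom, or None if no candidates."""
    required_rps = target_rps * (1.0 + headroom)
    best: Optional[Tuple[Candidate, int, float]] = None
    for c in candidates:
        if c.throughput_rps <= 0:
            continue  # skip entries with no usable throughput
        count = math.ceil(required_rps / c.throughput_rps)
        total_watts = count * c.power_watts
        if best is None or total_watts < best[2]:
            best = (c, count, total_watts)
    return best


if __name__ == "__main__":
    # Made-up figures for illustration only.
    options = [Candidate("cpu-a", 430.0, 300.0), Candidate("gpu-b", 3000.0, 1200.0)]
    for target in (1000.0, 9000.0):
        choice, n, watts = right_size(options, target)
        print(f"{target:.0f} rps -> {n} x {choice.name}, {watts:.0f} W")
```

With these invented numbers, three `cpu-a` servers (900 W) win at 1,000 requests per second, while four `gpu-b` servers (4,800 W) win at 9,000. The point is not the specific result but that the right choice depends on the workload's actual demand.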

Energy-efficient CPUs offer a promising way to counter the escalating electricity demand from AI expansion. Companies such as Scaleway and Oracle have implemented CPU-based AI inference, promoting sustainable practices and showing that CPUs can handle demanding AI tasks efficiently.

Matching CPUs with Performance and Energy Needs

Integrating new CPU technologies into existing data centers requires careful consideration of factors like high utilization, AI-specific features, economic benefits, and simplicity of integration. By focusing on these aspects, data centers can balance performance and energy efficiency effectively, preparing for future AI demands.
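High utilization matters because a server draws substantial power even when idle. The sketch below assumes a simple linear power model, a common back-of-the-envelope simplification rather than a measured power profile: power rises linearly from an idle value to a peak value as utilization increases. Under that assumption, idle power is spread across more inferences as utilization rises, so energy per inference falls. The parameter values are hypothetical.

```python
def energy_per_inference_joules(
    utilization: float, idle_watts: float, max_watts: float, max_rps: float
) -> float:
    """Energy per inference (joules) under an assumed linear power model."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    # Assumed model: power goes from idle_watts at 0% to max_watts at 100% utilization.
    power_watts = idle_watts + utilization * (max_watts - idle_watts)
    # Throughput scales with utilization up to max_rps inferences per second.
    inferences_per_second = utilization * max_rps
    # Watts are joules per second, so dividing by inferences per second gives J per inference.
    return power_watts / inferences_per_second


if __name__ == "__main__":
    # Hypothetical server: 100 W idle, 300 W at full load, 500 inferences/s peak.
    for u in (0.3, 0.8):
        j = energy_per_inference_joules(u, idle_watts=100.0, max_watts=300.0, max_rps=500.0)
        print(f"utilization {u:.0%}: {j:.2f} J per inference")
```

With these example figures, raising utilization from 30% to 80% lowers energy per inference from about 1.07 J to 0.65 J, because the fixed 100 W idle draw is amortized over more work.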

Advancing CPU Technology for AI

Industry alliances such as the AI Platform Alliance play an important role in advancing CPU technology for AI. These alliances focus on improving energy efficiency and performance and on developing interoperable solutions optimized for a range of AI tasks.

This kind of industry-wide collaboration can deliver significant energy savings and better AI application performance, and it shows how shared effort drives innovation.

Jeff Wittich is chief product officer at Ampere Computing.

Generative AI Insights provides a venue for technology leaders to explore and discuss the challenges and opportunities of generative artificial intelligence. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Contact doug_dineley@foundryco.com.

Copyright © 2024 IDG Communications, Inc.