The EU AI Act is a groundbreaking legal framework that regulates the design, development, and use of AI systems within the European Union. Its main goals are to ensure the safe and ethical use of AI, particularly in high-risk applications, to protect fundamental rights, and to promote innovation.

This legislation gives companies that rely on AI solutions, both inside and outside the EU, a clear framework to work within. Because it applies to any company offering AI systems on the EU market, it is a comprehensive approach to AI regulation that will affect businesses globally.

About us: Viso Suite offers a comprehensive platform for performing computer vision tasks in business settings. From people tracking to inventory management, Viso Suite helps address challenges across various industries. For more information on Viso Suite’s enterprise capabilities, schedule a demo with our team.

Understanding the EU AI Act: A Comprehensive Overview

The European Commission proposed the regulation in April 2021 to establish a unified legal framework for AI across EU member states. After more than three years of negotiations, the Act was published in the Official Journal of the EU on July 12, 2024, and entered into force on August 1, 2024.

Here’s a brief summary of the act:

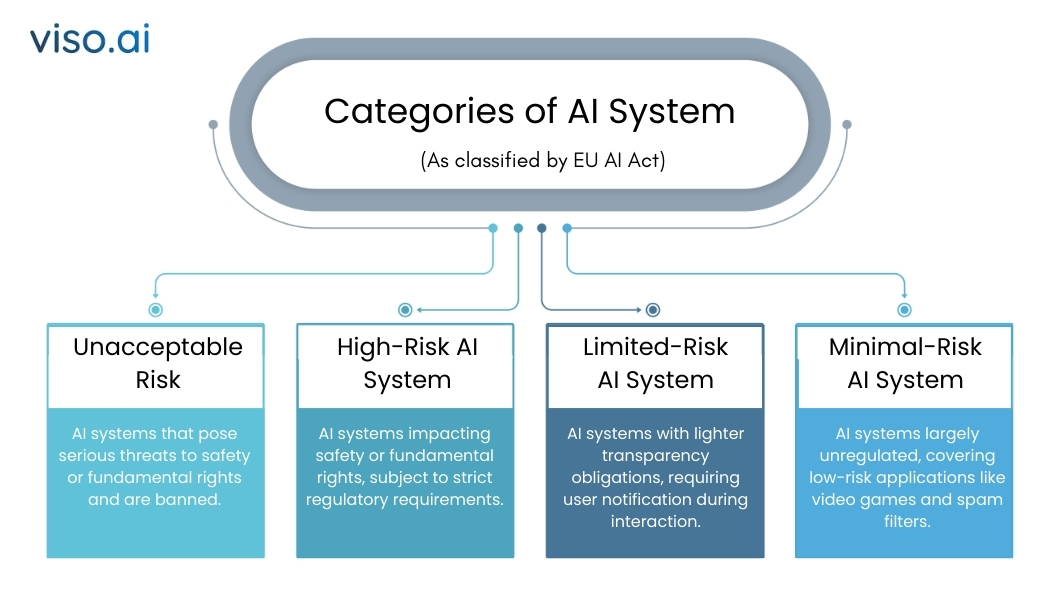

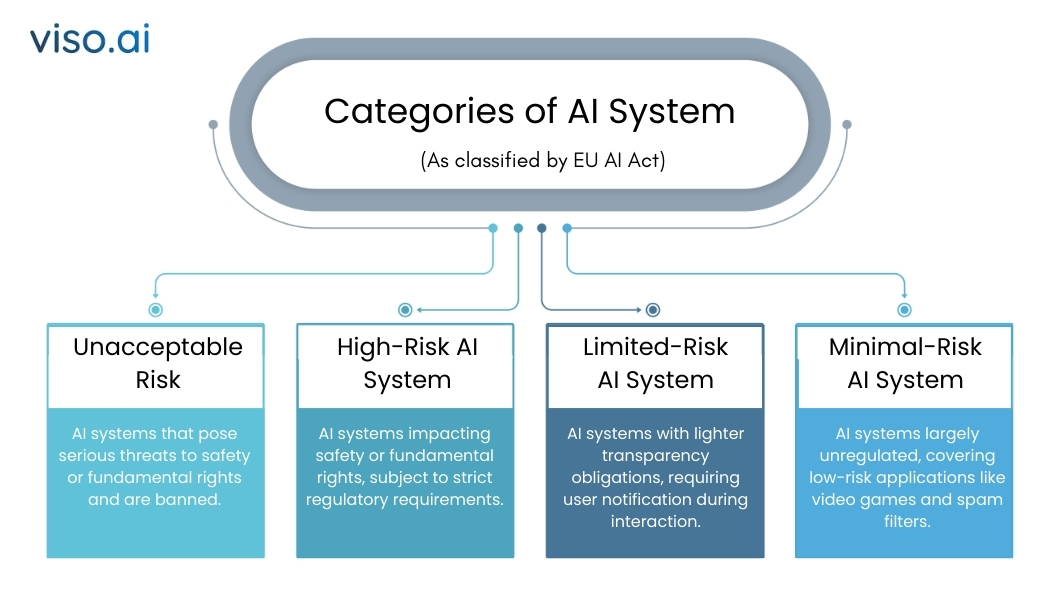

Risk-based Classification of AI Systems

The risk-based approach categorizes AI systems into four levels of risk:

Unacceptable Risk:

AI systems that pose an unacceptable risk to safety and fundamental rights, such as those used for social scoring or manipulative practices, are banned outright.

High-Risk AI Systems:

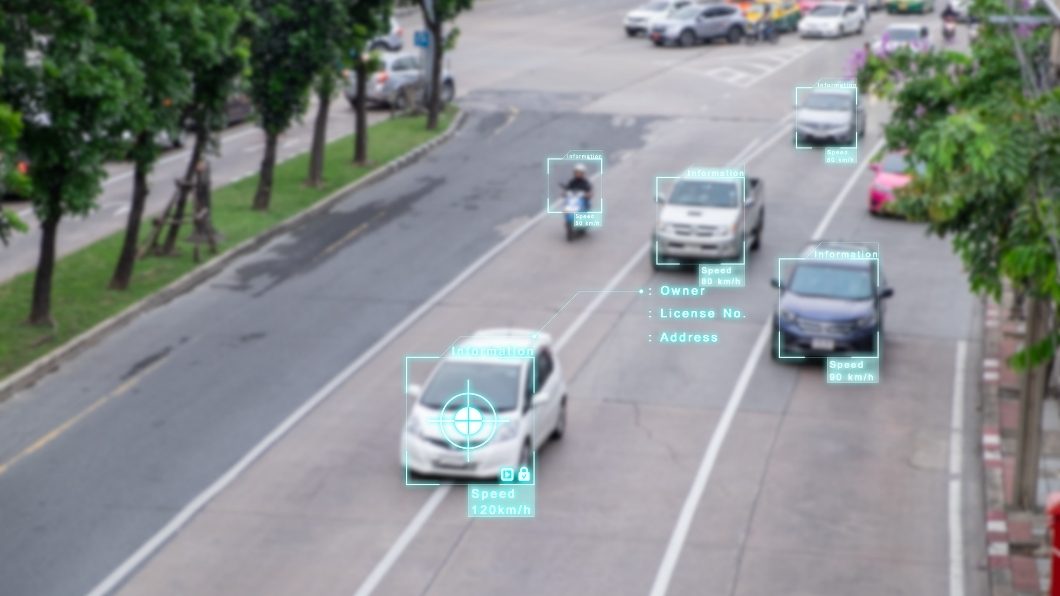

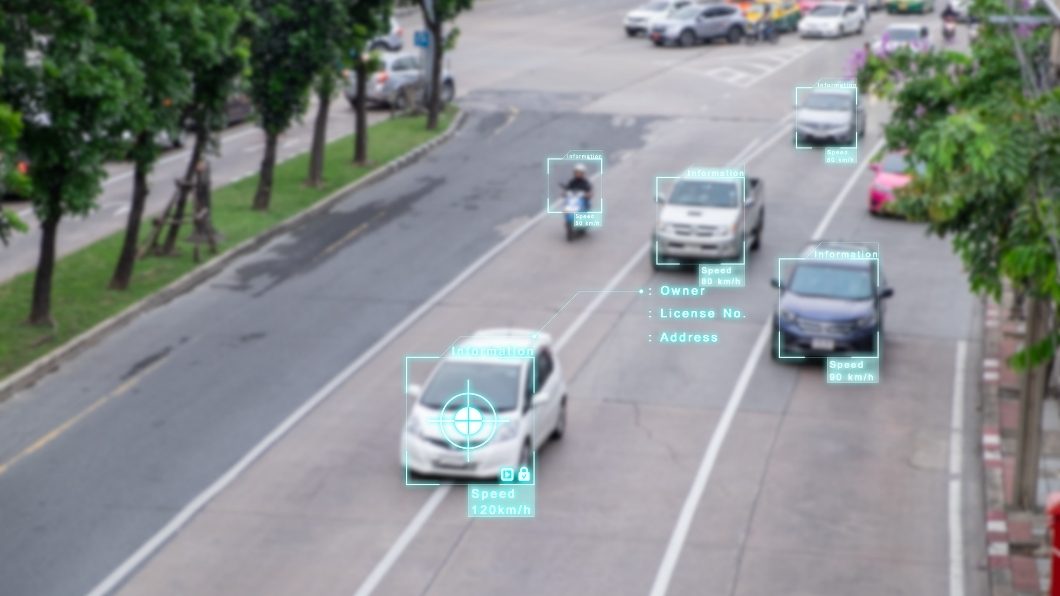

These systems can significantly affect safety or fundamental rights, for example in healthcare, law enforcement, or transportation. They are subject to stringent regulatory requirements, including conformity assessments, human oversight, and risk management.

Limited Risk:

Limited-risk systems, such as chatbots or deepfake generators, are subject to transparency obligations: end users must be told when they are interacting with AI or viewing AI-generated content.

Minimal Risk AI Systems:

Minimal-risk systems, such as AI in video games or spam filters, are currently not subject to specific obligations under the Act. However, regulatory changes may follow as generative AI advances.
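The four tiers above can be modeled as a simple lookup from use case to tier to consequence. This is a minimal sketch, assuming a hypothetical, hand-maintained mapping (`EXAMPLE_USE_CASES`) built from the examples in this article; real classification depends on the Act's annexes and legal review, not on a dictionary.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels, from most to least restrictive."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified mapping of example use cases to tiers.
EXAMPLE_USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "manipulative_practices": RiskTier.UNACCEPTABLE,
    "medical_diagnosis": RiskTier.HIGH,
    "law_enforcement": RiskTier.HIGH,
    "transport_safety": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "deepfake_generation": RiskTier.LIMITED,
    "video_game_ai": RiskTier.MINIMAL,
    "spam_filter": RiskTier.MINIMAL,
}

# Consequence of each tier, summarized from the descriptions in this article.
TIER_CONSEQUENCES = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "conformity assessment, risk management, human oversight",
    RiskTier.LIMITED: "transparency obligations toward end users",
    RiskTier.MINIMAL: "no specific obligations under the Act",
}


def classify(use_case: str) -> RiskTier:
    """Look up a use case; unknown cases raise instead of defaulting to minimal."""
    try:
        return EXAMPLE_USE_CASES[use_case]
    except KeyError:
        raise ValueError(f"Unknown use case {use_case!r}: needs legal review") from None


if __name__ == "__main__":
    tier = classify("chatbot")
    print(tier, "->", TIER_CONSEQUENCES[tier])
```

Raising on unknown use cases is a deliberate design choice: a new application gets flagged for review rather than silently treated as minimal risk.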

Obligations on Providers of High-Risk AI:

The compliance burden falls mostly on providers (developers) that place high-risk AI systems on the EU market or put them into service in the EU, whether the provider is established inside or outside the EU.

This also covers providers in third countries when the output of their high-risk AI system is used in the EU.

Users’ Responsibilities (Deployers):

Deployers, meaning those who use AI systems in a professional capacity, have less stringent obligations than providers but must still meet specific requirements when deploying high-risk AI systems.

These obligations apply to deployers in the EU and to deployers in third countries when the system's output is used in the EU.

General-Purpose AI (GPAI):

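Providers of general-purpose AI models must draw up technical documentation, provide information to downstream providers that build on their models, put in place a policy to comply with EU copyright law, and publish a summary of the content used for training.

Providers of free and open-license GPAI models only need to comply with copyright law and publish the training-data summary, unless their model poses systemic risks, in which case the full set of obligations applies.

Regardless of licensing, providers of GPAI models with systemic risks must additionally perform model evaluations (including adversarial testing), track and report serious incidents, and ensure adequate cybersecurity protection.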
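The conditional logic of the GPAI rules above is easier to follow as code. This is a simplified sketch with hypothetical obligation labels. The Act presumes systemic risk when a model's cumulative training compute exceeds 10^25 floating-point operations (FLOPs); the Commission can also designate models on other grounds, which is modeled here as a simple flag.

```python
from dataclasses import dataclass

# Training compute above which the Act presumes a GPAI model has
# high-impact capabilities, i.e. systemic risk.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


@dataclass
class GPAIModel:
    name: str
    training_flops: float
    free_and_open_license: bool
    designated_systemic: bool = False  # e.g. designated by the Commission

    @property
    def systemic_risk(self) -> bool:
        return self.designated_systemic or self.training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


def gpai_obligations(model: GPAIModel) -> set[str]:
    """Return a simplified, hypothetical set of obligation labels for a GPAI provider."""
    # Baseline duties that apply to every GPAI model, open-license or not.
    obligations = {"copyright_policy", "training_data_summary"}
    # Open-license models are exempt from documentation duties unless systemic.
    if not model.free_and_open_license or model.systemic_risk:
        obligations |= {"technical_documentation", "downstream_information"}
    # Extra duties for systemic-risk models, regardless of licensing.
    if model.systemic_risk:
        obligations |= {"model_evaluation", "adversarial_testing",
                        "incident_reporting", "cybersecurity"}
    return obligations


if __name__ == "__main__":
    small_open = GPAIModel("small-open", training_flops=1e23, free_and_open_license=True)
    large_open = GPAIModel("large-open", training_flops=5e25, free_and_open_license=True)
    print(sorted(gpai_obligations(small_open)))
    print(sorted(gpai_obligations(large_open)))
```

The second model shows how the open-license exemption disappears once a model crosses the systemic-risk threshold.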

Expectations for Companies

Companies using or developing AI technologies should prepare for changes in compliance, transparency, and operational oversight. Key expectations include:

High-Risk AI Control Requirements:

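Companies providing or deploying high-risk AI systems must document, test, and report rigorously. Providers in particular must conduct ongoing risk assessments, implement quality management systems, and design for human oversight. Non-compliance can result in significant fines under the AI Act itself, not the GDPR: up to €15 million or 3% of worldwide annual turnover for breaching most obligations, and up to €35 million or 7% for engaging in prohibited practices, whichever is higher.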
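The Act's fine caps (Article 99) combine a fixed amount with a share of total worldwide annual turnover: for most companies the higher of the two applies, while for SMEs the lower one does. A minimal sketch of that arithmetic; it covers only the two main tiers, omits the lower tier for supplying incorrect information to authorities, and computes a ceiling, not the fine actually imposed, which authorities set case by case.

```python
def max_fine_eur(worldwide_turnover_eur: float, prohibited_practice: bool,
                 is_sme: bool = False) -> float:
    """Upper bound of an administrative fine under the AI Act (simplified).

    Each cap is a fixed amount or a percentage of total worldwide annual
    turnover: the higher of the two for most companies, the lower for SMEs.
    """
    if prohibited_practice:
        fixed_cap, percent = 35_000_000, 7   # prohibited AI practices
    else:
        fixed_cap, percent = 15_000_000, 3   # most other obligations
    turnover_cap = worldwide_turnover_eur * percent / 100
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    print(max_fine_eur(1_000_000_000, prohibited_practice=True))
```

For a company with €1 billion in turnover, the turnover-based cap for a prohibited practice (€70 million) exceeds the €35 million fixed amount, so €70 million is the ceiling.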

Transparency Requirements:

Companies must communicate clearly with users about the presence of AI systems, promoting user autonomy and compliance with EU principles of transparency and fairness. This extends to disclosing AI-generated or modified content, such as deepfakes.

Data Governance and AI Training Data:

High-risk AI systems must be trained, validated, and tested on relevant, representative datasets that are examined for possible biases, to minimize the risk of discriminatory results. This requires businesses to strengthen their data governance practices.
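Checking datasets for representativeness and bias can start with simple statistics. This is a minimal sketch, assuming each example carries a group label and the model outputs binary predictions. The Act does not prescribe specific metrics or thresholds, so the `min_share` threshold and the use of demographic parity (one common fairness metric among several) are illustrative assumptions.

```python
from collections import Counter


def group_shares(groups: list[str]) -> dict[str, float]:
    """Fraction of examples that belong to each group (e.g. an age band)."""
    counts = Counter(groups)
    return {g: n / len(groups) for g, n in counts.items()}


def underrepresented(groups: list[str], min_share: float) -> list[str]:
    """Groups whose share of the dataset falls below a chosen threshold."""
    return sorted(g for g, share in group_shares(groups).items() if share < min_share)


def demographic_parity_gap(groups: list[str], predictions: list[int]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    if len(groups) != len(predictions):
        raise ValueError("groups and predictions must have the same length")
    totals, positives = Counter(groups), Counter()
    for g, p in zip(groups, predictions):
        positives[g] += p  # count positive (1) predictions per group
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


if __name__ == "__main__":
    groups = ["a", "a", "a", "b"]
    preds = [1, 1, 0, 0]
    print(group_shares(groups))
    print(underrepresented(groups, min_share=0.3))
    print(demographic_parity_gap(groups, preds))
```

A large gap or an underrepresented group does not prove discrimination, but it marks where the data governance process should look more closely.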

Impact on Product Development and Innovation:

The Act introduces new testing and validation procedures for AI developers, which may slow down development. Companies that integrate compliance measures early are likely to gain a competitive edge: while the initial regulatory burden may hamper innovation, businesses that adapt quickly will be well positioned in the EU market.

Key Guidelines for Compliance

Companies must follow these essential guidelines to comply with the EU AI Act:

Timeline for Enforcement

The Act sets out a phased enforcement schedule to give organizations time to adapt to the new requirements:

- 1 August 2024: The Act enters into force.

- 2 February 2025: Prohibition of AI systems categorized as “unacceptable risk.”

- 2 May 2025: Deadline for codes of practice that guide providers of general-purpose AI models in complying with the Act.

- 2 August 2025: Obligations for providers of general-purpose AI (GPAI) models take effect, along with the Act's governance rules, emphasizing transparency and safety.

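- 2 August 2026: Most remaining provisions apply, including requirements for the high-risk AI systems listed in Annex III, the transparency obligations, and the Commission's power to fine GPAI providers.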

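- 2 August 2027: Requirements apply to high-risk AI systems embedded in products covered by existing EU product-safety legislation, and GPAI models placed on the market before 2 August 2025 must comply, completing the transition period.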
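A compliance team tracking the schedule above may want to know which milestones have been reached on a given date and what comes next. This is a minimal sketch using Python's standard `datetime` module; the `MILESTONES` list and its shortened descriptions are illustrative, and the dates should be checked against the official text.

```python
from __future__ import annotations

from datetime import date

# Application dates from the Act's phased schedule, kept in chronological order.
MILESTONES = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "Prohibitions on unacceptable-risk AI apply"),
    (date(2025, 5, 2), "GPAI codes of practice due"),
    (date(2025, 8, 2), "GPAI obligations and governance rules apply"),
    (date(2026, 8, 2), "Most remaining provisions, including most high-risk rules, apply"),
    (date(2027, 8, 2), "High-risk rules for regulated products apply"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Descriptions of all milestones reached on or before the given date."""
    return [desc for when, desc in MILESTONES if when <= on]


def next_milestone(on: date) -> tuple[date, str] | None:
    """The first milestone strictly after the given date, or None if all have passed."""
    upcoming = [(when, desc) for when, desc in MILESTONES if when > on]
    return upcoming[0] if upcoming else None


if __name__ == "__main__":
    today = date(2025, 3, 1)
    print(milestones_in_effect(today))
    print(next_milestone(today))
```

Because the list is sorted by date, the first upcoming entry is always the nearest milestone.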
