During QCon San Francisco 2024, Meta’s Ye (Charlotte) Qi shared insights on the intricacies of managing Large Language Models (LLMs) at scale.

As reported by InfoQ, Qi’s discussion delved into the challenges of handling massive models in practical settings, emphasizing the hurdles posed by their size, intricate hardware demands, and rigorous production environments.

She likened the current AI surge to an “AI Gold Rush,” where innovation is pursued fervently but obstacles abound. According to Qi, effectively deploying LLMs goes beyond simply fitting them onto existing hardware; it entails optimizing performance while managing costs efficiently. This, she stressed, necessitates close collaboration between infrastructure and model development teams.

Optimizing LLMs for Hardware

One major challenge with LLMs is their substantial resource requirements—many models surpass the capabilities of a single GPU. To address this, Meta utilizes strategies like distributing the model across multiple GPUs through tensor and pipeline parallelism. Qi underscored the importance of understanding hardware constraints, as mismatches between model design and available resources can impede performance significantly.

Her advice? Be strategic. “Don’t just grab your training runtime or your favourite framework,” she advised. “Find a runtime specialized for inference serving and delve deep into your AI problem to select the optimal optimizations.”

For applications reliant on real-time outputs, speed and responsiveness are crucial. Qi highlighted techniques such as continuous batching to maintain system efficiency and quantization, which enhances hardware utilization by reducing model precision. These adjustments, she noted, can notably boost performance.

Navigating Prototypes to Production

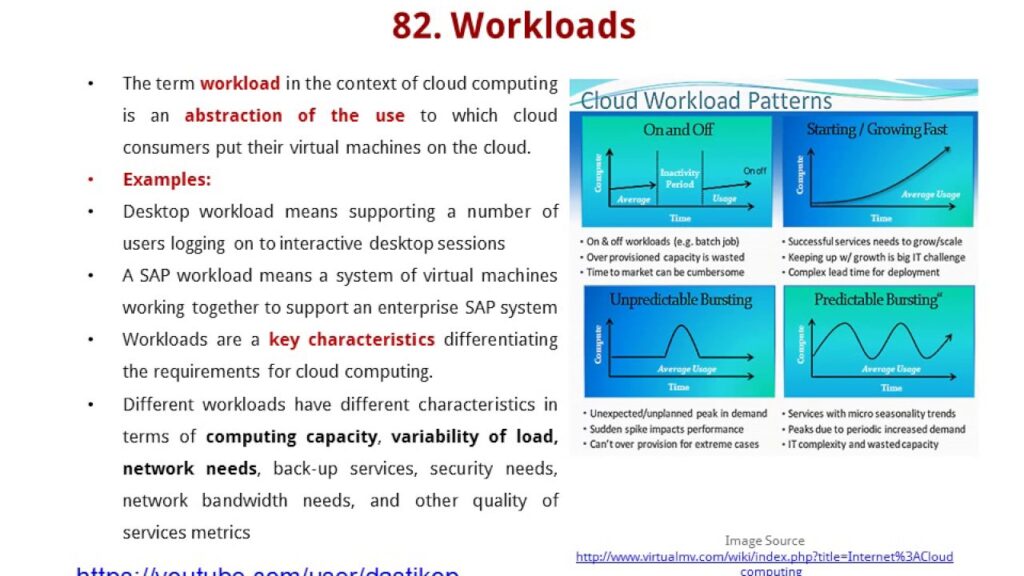

Transitioning an LLM from the lab to a production environment presents significant challenges. Real-world scenarios introduce unpredictable workloads and stringent speed and reliability requirements. Scaling involves a delicate balance of cost, reliability, and performance.

Meta tackles these challenges with approaches like disaggregated deployments, prioritized caching systems for frequently used data, and request scheduling to ensure efficiency. Qi highlighted the benefits of consistent hashing—a method that directs related requests to the same server—in enhancing cache performance.

Automation plays a pivotal role in managing complex systems. Meta relies on tools that monitor performance, optimize resource utilization, and streamline scaling decisions. Qi affirmed that Meta’s bespoke deployment solutions enable services to adapt to changing demands while controlling costs.

Embracing a Strategic Mindset

Scaling AI systems is more than a technical challenge for Qi; it is a mindset. She urged companies to adopt a holistic view to discern what truly matters. A strategic perspective helps businesses concentrate on efforts that deliver enduring value, continually refining systems.

Qi’s message was clear: success with LLMs demands more than technical expertise at the model and infrastructure levels—though these elements are crucial. It necessitates strategy, teamwork, and a focus on real-world impact.

(Photo by Unsplash)

See also: Samsung chief engages Meta, Amazon and Qualcomm in strategic tech talks

Interested in learning more about cybersecurity and cloud technologies from industry experts? Explore Cyber Security & Cloud Expo in Amsterdam, California, and London. Discover other upcoming enterprise technology events and webinars powered by TechForge here.