Convolutional neural networks (CNN) have transformed deep learning operations by their ability to analyze images, leading machines towards understanding visual data.

Their capacity to identify patterns, edges, and textures makes them indispensable for computer vision. CNNs power crucial AI innovations ranging from image recognition to self-driving cars.

Lets delve into their architecture, functionality, and applications in deep learning.

What is a Convolutional Neural Network?

Convolutional neural network is a specialized deep learning model designed to process grid-based data inputs such as images and videos. Unlike traditional fully connected neural networks, CNNs utilize a unique structure that mimics how the human brain processes visual information.

The basic building blocks of CNN networks consist of layers that utilize convolution to detect crucial image features.

With its capabilities, the network identifies image objects and their arrangement and surface structure without the need for human intervention to select features.

How CNN Works: Key Components

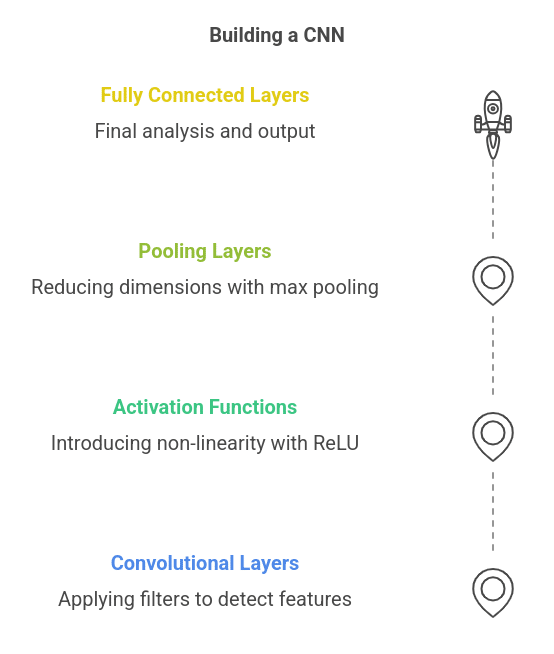

A convolutional neural network comprises multiple layers that collaborate to extract and analyze features from input data. Let’s dissect the essential components:

a) Convolutional Layers

The convolutional layer is the backbone of a CNN. It applies filters (kernels) to the input image, sliding across to identify specific features like edges, textures, and patterns. These filters assist the network in learning spatial hierarchies, with lower layers detecting simple patterns and deeper layers recognizing complex structures.

b) Activation Functions (ReLU)

Following convolution, the Rectified Linear Unit (ReLU) activation function introduces non-linearity into the network. As real-world data is highly complex, ReLU aids CNN in learning better representations by transforming negative values into zero and keeping positive values unchanged.

c) Pooling Layers

Pooling layers decrease the spatial dimensions of feature maps while retaining essential information. The most common method is max pooling, which selects the maximum value from a region of the feature map. This reduces computational complexity while enhancing the network’s robustness to minor variations in input images.

d) Fully Connected Layers

The network receives extracted features from all its convolutional and pooling layers, then sends them to the fully connected layer. This top layer processes the extracted information for advanced analysis and provides the final output.

Why Use CNN in Deep Learning?

The adoption of CNNs has revolutionized deep learning research as they can identify crucial image qualities without human guidance. CNNs are favored due to several key advantages such as:

- Efficient Feature Extraction – CNNs learn hierarchical patterns, making them highly effective for image-related tasks.

- Reduced Computational Complexity – Shared weights (filters) and pooling layers significantly reduce the number of parameters compared to traditional neural networks.

- High Accuracy in Image Recognition – CNNs are utilized in medical diagnostics systems, autonomous driving systems, and facial recognition technology.

Applications of Convolutional Neural Networks

CNN architectures serve as a primary deep learning method that excels in detecting images and patterns during computational processing, especially in tasks involving image and pattern recognition. Here are some key areas where CNNs shine:

a) Image Recognition

Convolutional neural networks (CNNs) are widely used in image recognition applications. Platforms like Google Photos and Facebook leverage CNNs to identify and categorize images based on objects, faces, and scenes. CNNs can differentiate between thousands of categories with high accuracy.

b) Object Detection

CNNs form the backbone of object detection systems utilized in security surveillance, self-driving cars, and facial recognition technologies. Models like YOLO (You Only Look Once) and Faster R-CNN employ convolutional layers to detect and classify multiple objects in real-time.

Learn about object detection using TensorFlow and enhance your machine learning skills with this detailed guide.

c) Medical Imaging

In the healthcare sector, CNNs assist healthcare professionals in making disease diagnoses through medical imaging. CNN-based medical models conduct X-ray, MRI, and CT scan analyses to identify medical anomalies such as tumors, infections, and fractures more effectively than traditional methods.

d) Natural Language Processing (NLP)

While CNNs are primarily utilized for image-related tasks, they are also applied in NLP tasks like text classification and sentiment analysis. By treating text as a 2D structure (word embeddings), CNNs can recognize key phrases and context in a document.

Explore the realm of Natural Language Processing and enhance your expertise with this comprehensive Guide.

How CNN Differs from Traditional Neural Networks

Traditional artificial neural networks (ANNs) rely on fully connected layers, where every neuron is connected to all others in the next layer. This results in a large number of parameters and computational inefficiency, especially for high-dimensional data like images.

CNNs, on the other hand:

- Utilize convolutional layers to detect spatial hierarchies and patterns.

- Have local connectivity (not every neuron is connected to all others).

- Share weights (filters), reducing the number of parameters significantly.

- Employ pooling layers to reduce dimensionality and enhance efficiency.

Due to these advantages, CNNs outperform ANNs in image processing tasks.

Learn more about different types of neural networks.

Challenges and Limitations of CNNs

Despite their benefits, CNNs present specific challenges:

- High Computational Cost – Training deep CNN models demands significant processing power and memory, often relying on GPUs.

- Need for Large Datasets – CNNs perform best with large, labeled datasets. Small datasets can lead to overfitting.

- Lack of Interpretability – CNNs operate as “black boxes,” making it challenging to understand how they arrive at specific decisions.

- Vulnerability to Adversarial Attacks – Minor changes in input images can misguide CNNs, resulting in incorrect predictions.

Researchers are continuously enhancing CNN innovations to overcome these challenges.

Learn more about deep learning.

Future of CNNs in AI and Deep Learning

The future of CNN in deep learning appears promising, with advancements in:

- Capsule Networks – A potential enhancement over CNNs that preserves spatial hierarchies more efficiently.

- Efficient Architectures – Models like MobileNet and EfficientNet optimize CNNs for mobile and edge computing.

- Integration with Transformers – Merging CNNs with transformer models is enhancing vision-based AI applications.

Future advancements in image recognition, healthcare, robotics, and other fields will rely on progress in CNNs.

Conclusion

The convolutional neural network has revolutionized how deep learning handles visual data by making machines adept at accurately recognizing images. Computers utilize CNNs as a vital tool to advance AI technology applications.

CNNs are continuously evolving to construct more efficient and intelligent AI systems. Research advancements will incorporate CNN technology into new deep learning systems to achieve superior outcomes.

Related Free Courses:

Frequently Asked Questions(FAQ’s)

1. How do CNNs handle different image sizes?

CNNs can process images of varying sizes by utilizing adaptive architectures like global pooling layers or resizing images before inputting them into the network. Many CNN models require a fixed input size, so images are often preprocessed using padding or cropping.

2. Can CNNs be used for non-image data?

Yes! While CNNs are primarily utilized for image processing, they can also be applied to tasks like speech recognition, time-series analysis, and natural language processing (NLP) by treating sequential data as 2D representations (e.g., spectrograms for audio or word embeddings for text).

3. What are pre-trained CNN models, and why are they useful?

Pre-trained CNN models like VGG16, ResNet, and MobileNet are trained on large datasets (e.g., ImageNet) and can be fine-tuned for specific tasks. They help accelerate training and enhance accuracy, especially when labeled data is limited.

4. How do CNNs compare to Vision Transformers (ViTs)?

CNNs excel at learning local patterns through convolutional layers, while Vision Transformers (ViTs) focus on global attention mechanisms to capture long-range dependencies. ViTs are gaining traction for tasks where understanding relationships between distant pixels is crucial.