DeepSeek Janus-Pro is an innovative multimodal AI model developed by DeepSeek to combine visual and language processing capabilities in one architecture. It is open-source and designed for local use to ensure privacy, control, and faster response times without relying on cloud-based solutions.

The model utilizes the SigLIP-L Vision Encoder, allowing for tasks such as image generation from text prompts and comprehensive image understanding. In this guide, we will take you through the step-by-step process of setting up and using DeepSeek Janus-Pro on your machine, covering installation, configuration, and best practices to maximize its potential.

What is DeepSeek Janus-Series?

The DeepSeek Janus-Series consists of advanced multimodal AI models designed for processing and generating both textual and visual data seamlessly. Each model in the series builds upon the previous one, introducing enhancements in efficiency, accuracy, and image generation quality. The three models in the series are:

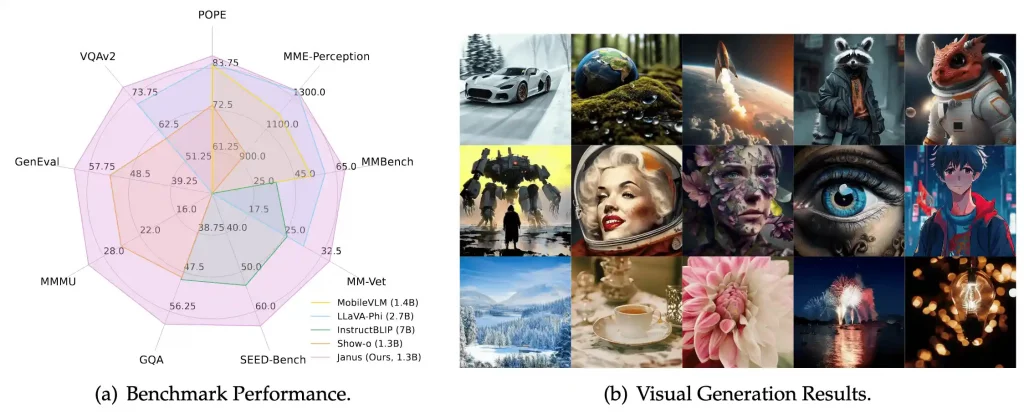

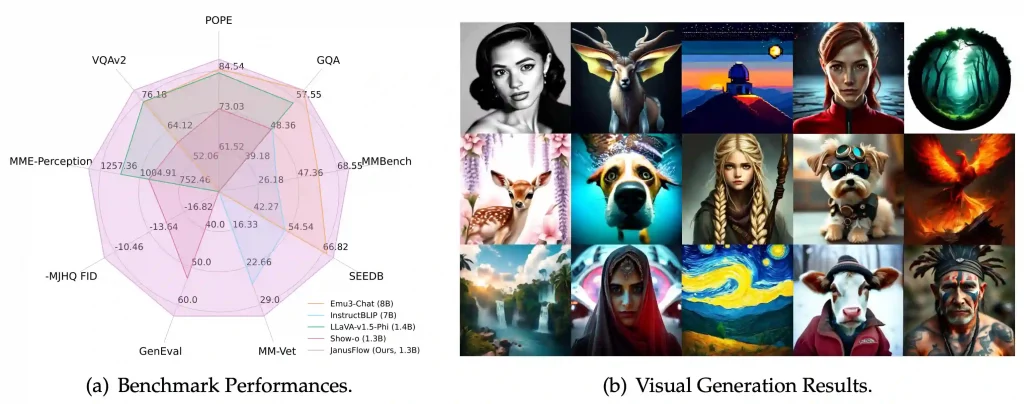

1. Janus

Janus serves as the foundation of the series with a unified transformer architecture that can handle both language and vision-based tasks effectively. It utilizes an autoregressive framework, making it suitable for tasks like image captioning, text-based image retrieval, and multimodal reasoning.

2. JanusFlow

JanusFlow enhances Janus by introducing flow-based rectification techniques, improving its image generation capabilities. The model is optimized for stability and high-quality rendering, making it a strong competitor to existing text-to-image models.

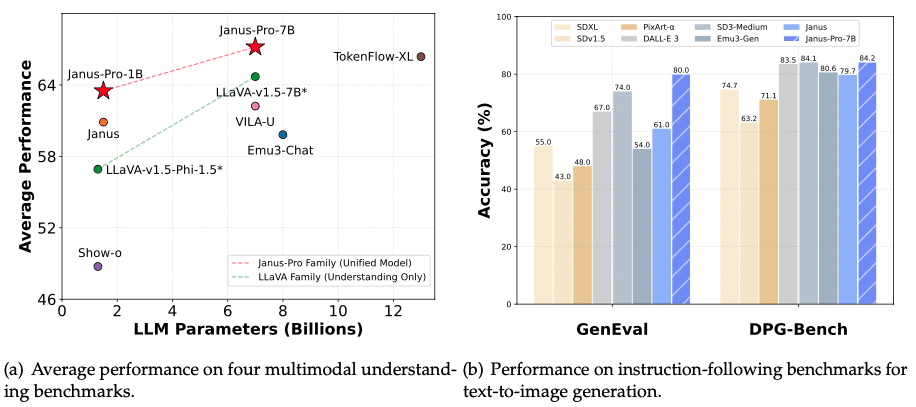

3. Janus-Pro

Janus-Pro, the most advanced model in the series, is designed for high-performance multimodal AI applications. It offers expanded training datasets, optimized inference efficiency, and superior image generation capabilities, often outperforming models like DALL-E 3 and Stable Diffusion in benchmark tests.

Step-by-Step Installation Guide for DeepSeek Janus-Pro

1. System Requirements

Before proceeding with the installation, ensure your system meets the following requirements:

Hardware Requirements:

- GPU: NVIDIA GPU with at least 16GB VRAM (e.g., RTX 3090, RTX 4090) for optimal performance.

- RAM: Minimum 16GB (32GB recommended).

- Storage: At least 20GB of free space for model weights and dependencies.

- CPU: A modern multi-core processor (Intel i7/AMD Ryzen 7 or higher recommended).

Software Requirements:

- Operating System: Windows 10/11 (64-bit).

- Python: Version 3.8 or later (recommended 3.10+).

- CUDA Toolkit: For GPU acceleration (ensure compatibility with GPU drivers).

- Microsoft Visual C++ Build Tools: Required for compiling certain Python packages.

2. Install Essential Software and Dependencies

Step 1: Install Python

- Download Python 3.10+ from the official website.

- During installation, ensure to check the box “Add Python to PATH” before proceeding with the installation.

Verify the installation by running the following command:

Step 2: Install CUDA Toolkit (for NVIDIA GPUs)

- Download the CUDA Toolkit from NVIDIA’s website.

- Install the CUDA Toolkit and ensure it matches your GPU driver version.

Step 3: Install Microsoft Visual C++ Build Tools

3. Set Up Virtual Environment

To avoid conflicts with other Python projects, it is recommended to create a virtual environment.

– Open Command Prompt and navigate to your desired project directory:

– Create a virtual environment:

– Activate the virtual environment:

janus_env\Scripts\activate

(You’ll see (janus_env) appear before the command line, indicating it’s activated.)

4. Install Required Python Packages

– Upgrade pip first:

pip install --upgrade pip

Now, install the required dependencies:

– Install PyTorch with CUDA support (for GPU acceleration):

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

(Replace cu118 with your CUDA version, e.g., cu121 for CUDA 12.1.)

– Install the Hugging Face Transformers library:

– (Optional) Install SentencePiece and other tools for tokenization:

pip install sentencepiece accelerate

5. Download and Load DeepSeek Janus-Pro 7B Model

We will use Hugging Face Transformers to download and load the model:

– Create a Python script (e.g., download_model.py) and add the following code:

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "deepseek-ai/Janus-Pro-7B"

# Load tokenizer and model

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

print("Model and tokenizer downloaded successfully!")

– Run the script to download the model:

This will automatically download the Janus-Pro 7B model to your local machine.

6. Running DeepSeek Janus-Pro 7B Locally

Now, let’s test the model by generating a response to a prompt:

– Create another Python script (e.g., run_janus.py) and add:

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "deepseek-ai/Janus-Pro-7B"

# Load tokenizer and model

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

# Input prompt

input_text = "Describe a futuristic city with AI-driven infrastructure."

inputs = tokenizer(input_text, return_tensors="pt")

# Generate response

outputs = model.generate(**inputs, max_length=100)

response = tokenizer.decode(outputs[0], skip_special_tokens=True)

print("AI Response:", response)

Run the script:

The model will process the input and generate an AI-generated response based on DeepSeek Janus-Pro’s capabilities.

Example: Enhancing Image Descriptions with DeepSeek Janus-Pro

Let’s refine a caption using DeepSeek Janus-Pro 7B for a more detailed and engaging description:

Step 1: Install and Load Janus-Pro

Step 2: Generate an Enhanced Description

from transformers import AutoModelForCausalLM, AutoTokenizer

# Load DeepSeek Janus-Pro 7B

model_name = "deepseek-ai/Janus-Pro-7B"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

# Generate an enhanced description

input_text = f"Improve this image description: '{caption}'. Make it more engaging and detailed."

inputs = tokenizer(input_text, return_tensors="pt")

outputs = model.generate(**inputs, max_length=150)

enhanced_caption = tokenizer.decode(outputs[0], skip_special_tokens=True)

print("Enhanced Caption:", enhanced_caption)

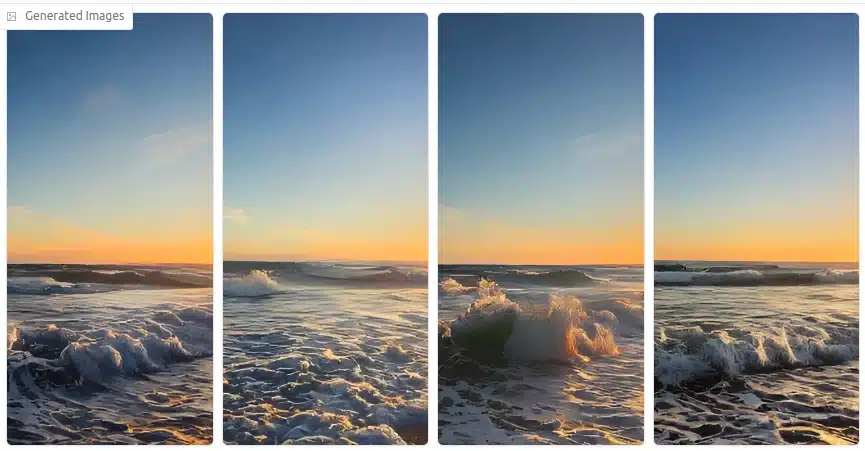

Example Output

Input Image

BLIP-Generated Caption

“A beautiful sunset over the ocean with waves crashing on the shore.”

DeepSeek Janus-Pro Enhanced Caption

The sun sets in a mesmerizing blend of orange, pink, and purple hues, reflecting off the calm ocean waves as they gently kiss the golden shore. A silhouette of a distant sailboat adds a touch of adventure to the serene scene.

Optimizing Performance in DeepSeek Janus-Pro 7B

DeepSeek Janus-Pro 7B is a powerful model, but optimizing it for faster inference, lower memory usage, and better response quality can significantly improve its usability. Below are key strategies to achieve this:

1. Speeding Up Inference with GPU Acceleration

Using a GPU (NVIDIA CUDA-enabled) can drastically improve inference speed compared to CPU execution.

– Enable GPU Support (Using PyTorch & CUDA)

First, ensure PyTorch detects your GPU:

import torch

print("GPU Available:", torch.cuda.is_available())

print("GPU Name:", torch.cuda.get_device_name(0) if torch.cuda.is_available() else "None")

If running on CPU, switch to GPU:

device = "cuda" if torch.cuda.is_available() else "cpu"

model.to(device)

– Use Flash Attention for Faster Inference

Flash Attention optimizes memory usage for large models. Install it via:

Then, enable it when loading the model:

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "deepseek-ai/Janus-Pro-7B"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.float16, attn_implementation="flash_attention_2").to("cuda")

2. Reducing Memory Consumption (Using Quantization)

Quantization reduces the model’s memory footprint by converting weights from FP32 to INT8/4-bit precision, making it easier to run on consumer GPUs.

– Install BitsandBytes for 4-bit & 8-bit Quantization

– Load Janus-Pro with 4-bit Quantization

from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

quant_config = BitsAndBytesConfig(load_in_4bit=True)

model = AutoModelForCausalLM.from_pretrained(model_name, quantization_config=quant_config).to("cuda")

Benefits of Quantization:

- Reduces VRAM usage from 30GB+ to 8GB-12GB (runs on RTX 3090/4090).

- Enables inference on mid-range GPUs like RTX 3060 (12GB).

Adjusting Parameters for Better Response Generation

Tuning parameters can improve response quality, balancing between creativity, coherence, and accuracy.

1. Adjust Temperature & Top-k Sampling

- Temperature (0.2–1.0): Lower values for more factual responses, higher for creative.

- Top-k Sampling (Top 40-100): Limits vocabulary choices to reduce randomness.

input_text = "Explain quantum computing in simple terms."

inputs = tokenizer(input_text, return_tensors="pt").to("cuda")

output = model.generate(**inputs, max_length=300, temperature=0.7, top_k=50,