Edited & Reviewed By: Dr. Davood Wadi (Faculty, University Canada West)

In today's AI landscape, Large Language Models (LLMs) are widely used to generate human-like text and perform a broad range of tasks. However, these models are prone to hallucinations: they can produce fabricated or nonsensical information, often because they lack the relevant contextual knowledge.

One promising way to reduce hallucinations in LLMs is Retrieval Augmented Generation (RAG). RAG draws on external knowledge sources. It retrieves relevant information and combines it with the user's query, so the model can generate accurate, contextually appropriate responses.

This article delves into the key concepts discussed in a recent masterclass on Retrieval Augmented Generation (RAG), offering insights into its implementation, evaluation, and deployment.

Understanding Retrieval Augmented Generation (RAG)

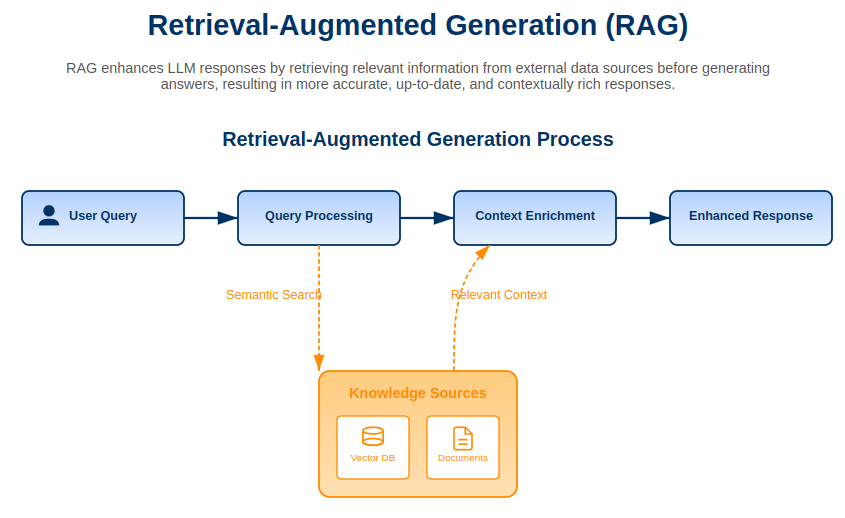

RAG enhances an LLM by retrieving relevant contextual information from a designated knowledge base at query time. Because the supporting documents are fetched when each query arrives, responses are grounded in reliable knowledge sources rather than relying solely on what the model learned during pre-training.
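To make "retrieving relevant context" concrete, here is a minimal retrieval sketch. It assumes a tiny in-memory knowledge base (the document names and contents are hypothetical) and ranks documents by bag-of-words cosine similarity to the query. Production RAG systems typically use dense embeddings and a vector database instead, so treat this as an illustration of the ranking step, not a recommended retriever.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, float]]:
    """Return (doc_id, score) for the k documents most similar to the query."""
    q_vec = Counter(tokenize(query))
    scored = [(doc_id, cosine(q_vec, Counter(tokenize(text)))) for doc_id, text in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical knowledge base: document id -> text.
knowledge_base = {
    "policy.txt": "Employees accrue 20 days of paid leave per year.",
    "it.txt": "Reset your password from the IT self-service portal.",
    "travel.txt": "Travel expenses must be submitted within 30 days.",
}

for doc_id, score in retrieve("How many days of paid leave do employees get?", knowledge_base):
    print(f"{doc_id}: {score:.3f}")
```

Here `policy.txt` ranks first because it shares the most terms with the query. Only the top-ranked documents are passed on to the LLM as context.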

Why RAG?

- Reduces hallucinations: By limiting responses to information retrieved from documents, RAG improves reliability.

- More cost-effective than fine-tuning: RAG draws on external data at query time, so keeping the system's knowledge current means updating the document collection rather than retraining a large model.

- Enhances transparency: Users can trace responses back to the source documents, increasing trustworthiness.

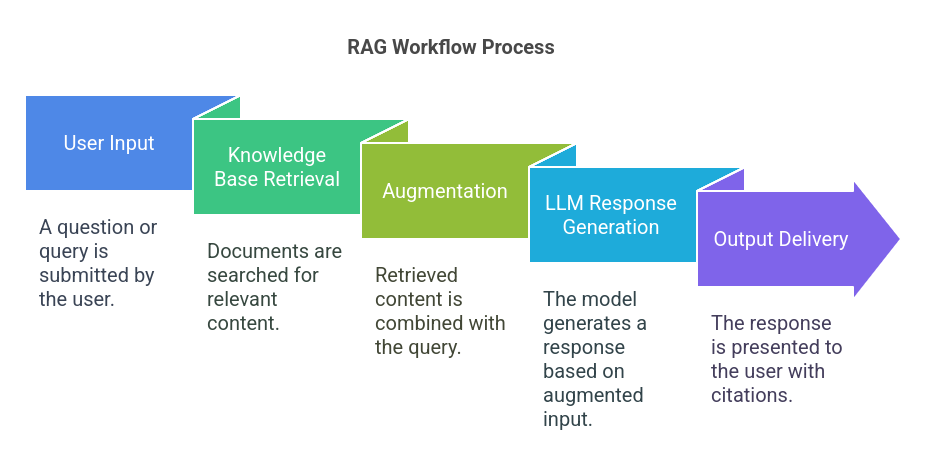

RAG Workflow: How It Works

- User Input: A question or query is submitted.

- Knowledge Base Retrieval: The system searches the knowledge base for documents relevant to the query. These documents can come in various formats, such as PDFs, text files, and web pages.

- Augmentation: Retrieved content is combined with the query before being processed by the LLM.

- LLM Response Generation: The model generates a response based on the augmented input.

- Output Delivery: The response is presented to the user, ideally with citations to the retrieved documents.
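The five steps above can be sketched end to end in a few functions. This is a minimal illustration under stated assumptions, not a production design. The chunk ids and texts are hypothetical. Retrieval uses simple word overlap as a stand-in for vector search. The prompt wording is an assumption. `call_llm` is a hypothetical placeholder that echoes the top-ranked passage so the sketch runs offline without any model API.

```python
import re

# Hypothetical knowledge base: chunk id -> text. In a real system these chunks
# would be extracted from PDFs, text files, or web pages.
KNOWLEDGE_BASE = {
    "leave_policy.pdf#p2": "Full-time employees accrue 20 days of paid leave per year.",
    "handbook.txt": "Unused leave can be carried over for up to three months.",
    "it_faq.html": "Passwords must be reset every 90 days via the self-service portal.",
}


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Knowledge base retrieval: rank chunks by word overlap with the query
    (a simple stand-in for embedding-based vector search)."""
    q = tokenize(query)
    ranked = sorted(KNOWLEDGE_BASE.items(),
                    key=lambda item: len(q & tokenize(item[1])), reverse=True)
    # Keep only the top k chunks that share at least one word with the query.
    return [(cid, text) for cid, text in ranked[:k] if q & tokenize(text)]


def augment(query: str, passages: list[tuple[str, str]]) -> str:
    """Augmentation: combine numbered passages with the query into one prompt."""
    context = "\n".join(f"[{i}] ({cid}) {text}" for i, (cid, text) in enumerate(passages, 1))
    return ("Answer using only the numbered context and cite it like [1]. "
            "If the context does not contain the answer, say so.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}\nAnswer:")


def call_llm(prompt: str) -> str:
    """Generation (hypothetical stub): a real system would send `prompt` to an LLM.
    This placeholder echoes the top-ranked context line so the sketch runs offline."""
    for line in prompt.splitlines():
        if line.startswith("[1]"):
            return line
    return "The provided documents do not contain the answer."


def answer(query: str) -> tuple[str, list[str]]:
    passages = retrieve(query)                      # knowledge base retrieval
    prompt = augment(query, passages)               # augmentation
    response = call_llm(prompt)                     # LLM response generation
    return response, [cid for cid, _ in passages]  # output delivery with citations


if __name__ == "__main__":
    response, sources = answer("How many days of paid leave do full-time employees get?")
    print(response)
    print("Sources:", sources)
```

Returning the chunk ids alongside the response is what lets users trace an answer back to its source documents. Swapping in embedding-based retrieval and a real model call would keep the same overall shape.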