DeepSeek R1 is an advanced AI language model that offers enhanced privacy, speed, and customization when run locally. Using Ollama, a lightweight AI model manager, you can easily install and run DeepSeek R1 on your system.

This guide covers the following:

- Installing Ollama on macOS, Windows, and Linux

- Downloading and running DeepSeek R1 locally

- Interacting with the model using simple commands

By the end of this guide, you’ll be able to set up and use DeepSeek R1 on your local machine.

What is DeepSeek R1?

DeepSeek R1 is an open-source, reasoning-focused large language model suited to natural language processing (NLP), chatbots, and text generation. Run locally, it serves as an on-premise alternative to cloud-based AI models like ChatGPT and Gemini, so your data is processed on your own machine.

Why Run DeepSeek R1 Locally?

| Benefit | Description |
|---|---|
| Privacy | Keeps prompts and data on your machine instead of sending them to external servers |
| Speed | Avoids network round trips to a cloud server; actual response time depends on your hardware |
| Customization | Allows fine-tuning for specific tasks or workflows |
| Offline Access | Works without an internet connection once the model is downloaded |

To run DeepSeek R1 locally, begin by installing Ollama, which serves as a lightweight AI model runtime.

What is Ollama?

Ollama is an AI model management tool that simplifies running large language models locally. It offers:

- Easy installation and setup – No complex configurations needed

- Efficient model execution – Optimized for consumer hardware

- Offline capabilities – Models can run without an internet connection once downloaded

Ollama acts as a lightweight AI model runtime, enabling users to pull, serve, and interact with AI models like DeepSeek R1 on their local machines.

Installing Ollama

Follow these steps to install Ollama on your system:

For macOS: Open Terminal and run:

brew install ollama

If the Homebrew package manager isn’t installed, visit brew.sh and follow the setup instructions.

For Windows & Linux:

- Download Ollama from the official Ollama website.

- Follow the installation guide for your operating system.

Alternatively, Linux users can install it via Terminal:

curl -fsSL https://ollama.com/install.sh | sh

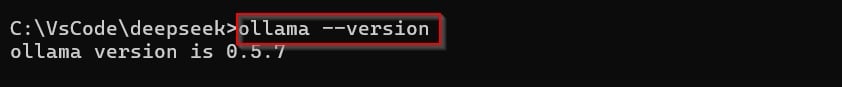

To verify the installation, run:

ollama --version

Once Ollama is successfully installed, proceed with setting up DeepSeek R1.

Steps to Run DeepSeek R1 Locally on Ollama

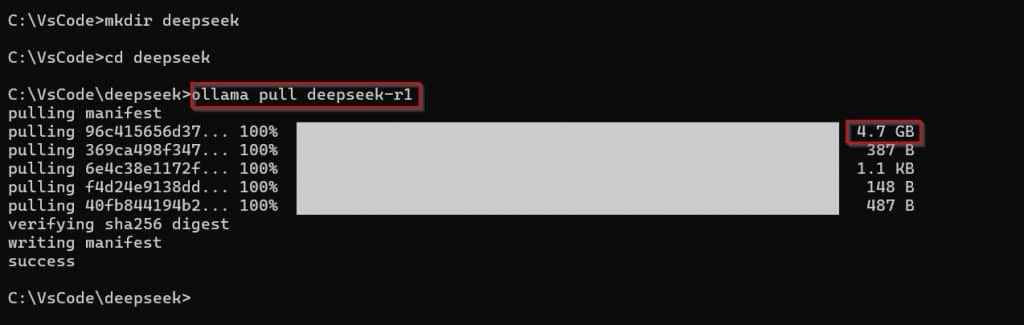

Step 1: Download the DeepSeek R1 Model

To start using DeepSeek R1, download the model by running:

ollama pull deepseek-r1

For a smaller version, specify the model size:

ollama pull deepseek-r1:1.5b

After the download completes, you’re ready to use DeepSeek R1.
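
One way to confirm the download landed is to look for the model in the output of ollama list. The sketch below assumes the first column of that output is the model name with its tag (for example deepseek-r1:1.5b). The function name model_installed is a hypothetical helper, not part of Ollama.

```shell
# Hypothetical helper: succeeds if `ollama list` shows a model whose name
# equals $1 or starts with "$1:" (the name plus any tag).
model_installed() {
  ollama list | awk -v name="$1" '
    NR > 1 && ($1 == name || index($1, name ":") == 1) { found = 1 }
    END { exit !found }
  '
}

# Usage (requires Ollama to be installed and running):
#   model_installed deepseek-r1 && echo "DeepSeek R1 is available"
```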

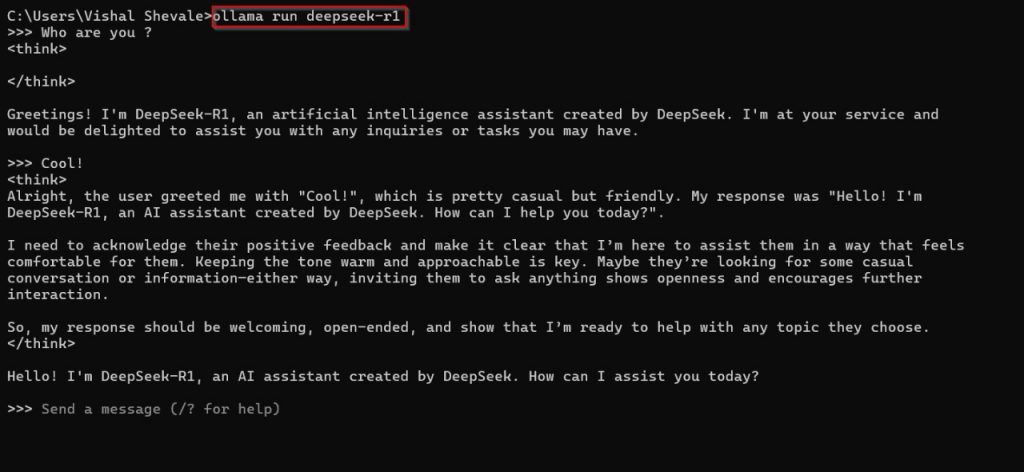

Step 2: Start the Model

The Ollama server normally starts automatically after installation. If it does not, start it manually:

ollama serve

Step 3: Interact with DeepSeek R1

With the server running, open an interactive session in the terminal and type a query at the prompt:

ollama run deepseek-r1

You will receive a response from the model.
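
Beyond the interactive terminal session, ollama run accepts the prompt as an argument and exits after printing the reply, which is convenient in scripts. A minimal wrapper, assuming the model was pulled under the name deepseek-r1; ask_r1 and the R1_MODEL variable are hypothetical names chosen for this example:

```shell
# Hypothetical wrapper: send one prompt to the model and print the reply.
# R1_MODEL is an assumed override for picking another tag, e.g. deepseek-r1:1.5b.
ask_r1() {
  ollama run "${R1_MODEL:-deepseek-r1}" "$1"
}

# Usage (requires Ollama and a pulled model):
#   ask_r1 "Explain recursion in two sentences"
```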

Troubleshooting Common Issues

1. Ollama Not Found

Issue: The ollama command is not recognized.

Solution: Restart your terminal and verify the installation by running:

ollama --version
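
If the ollama command is still not recognized after a restart, it helps to find out whether the shell can see the binary at all. The following sketch uses the standard command -v lookup; check_cmd is a hypothetical helper name.

```shell
# Hypothetical helper: report whether a command is visible on PATH.
check_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1 found at: $(command -v "$1")"
  else
    echo "$1 not found on PATH; reopen the terminal or reinstall" >&2
    return 1
  fi
}

# Usage:
#   check_cmd ollama
```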

2. Model Download Fails

Issue: Slow download or errors when pulling DeepSeek R1.

Solution:

- Check your internet connection.

- Use a VPN if your region has restrictions.

- Retry the command after some time.
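
Retrying after some time can be automated with a small retry loop. This is a generic sketch, not an Ollama feature: retry is a hypothetical helper that reruns any command and doubles the wait between attempts.

```shell
# retry MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Hypothetical helper: rerun COMMAND until it succeeds or attempts run out.
retry() {
  max=$1
  delay=$2
  shift 2
  attempt=1
  while :; do
    if "$@"; then
      return 0
    fi
    if [ "$attempt" -ge "$max" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    echo "attempt $attempt failed; retrying in ${delay}s" >&2
    sleep "$delay"
    attempt=$((attempt + 1))
    delay=$((delay * 2))   # back off: wait twice as long next time
  done
}

# Usage: up to 5 attempts, starting with a 10-second wait
#   retry 5 10 ollama pull deepseek-r1
```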

Conclusion

Running DeepSeek R1 locally with Ollama offers privacy, faster processing, and offline accessibility. By following this guide, you have successfully:

✅ Installed Ollama on your system.

✅ Downloaded and set up DeepSeek R1 locally.

✅ Ran and interacted with the model via Terminal commands.

For further customization, explore Ollama’s documentation and fine-tune DeepSeek R1 for specific applications.

Frequently Asked Questions

1. How much RAM and storage are required to run DeepSeek-R1 locally?

Requirements depend on the model size. As a baseline for running DeepSeek-R1 locally, plan for at least 16 GB of RAM and approximately 20 GB of free SSD storage. Larger DeepSeek models need more RAM and storage, and may need a dedicated GPU.
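
A quick way to check the storage side before pulling a model is to ask df for free space. The sketch assumes a POSIX df (macOS or Linux) and takes the directory to check as an argument, since where Ollama stores models depends on how it was installed. free_gb and enough_space are hypothetical helper names, and the 20 GB default comes from the estimate above.

```shell
# Print free space, in whole GB, on the filesystem holding directory $1.
free_gb() {
  df -Pk "$1" | awk 'NR == 2 { print int($4 / 1024 / 1024) }'
}

# Succeed if directory $1 has at least $2 GB free (default: 20).
enough_space() {
  [ "$(free_gb "$1")" -ge "${2:-20}" ]
}

# Usage:
#   enough_space "$HOME" 20 && echo "enough room for the model"
```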

2. How do I fix the “command not found” error for DeepSeek R1?

Ensure Ollama is installed correctly by running ollama --version. Restart your terminal and verify DeepSeek R1 exists using ollama list. Reinstall Ollama if the issue persists.

3. Can I fine-tune DeepSeek R1 locally?

Yes, DeepSeek R1 can be fine-tuned on local datasets, but it requires high-end GPU resources. Advanced knowledge of model training is recommended for customization.

4. How do I uninstall DeepSeek R1 from my system?

Run ollama rm deepseek-r1 to remove the model. To uninstall Ollama completely, follow the official Ollama removal guide for your OS.

5. Does DeepSeek R1 support multiple languages?

DeepSeek R1 works best in English and Chinese, and can generate responses in other languages with varying accuracy. Performance depends on the training data.

6. Can I integrate DeepSeek R1 into my applications?

Yes, DeepSeek R1 can be integrated into applications using the Ollama API. Check the official documentation for API commands and implementation steps.
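
As a starting point, Ollama exposes a local HTTP API, by default on port 11434, with an /api/generate endpoint that accepts a JSON body. The sketch below builds that body and posts it with curl. The helper names and the OLLAMA_URL variable are hypothetical, and the escaping only handles backslashes and double quotes, so prompts containing newlines or control characters would need a real JSON encoder.

```shell
# Escape backslashes and double quotes so a string can sit inside JSON quotes.
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# Build the request body for /api/generate: model name, prompt, no streaming.
build_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}\n' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send prompt $1 to model $2 (default: deepseek-r1) and print the raw JSON reply.
# OLLAMA_URL is a hypothetical override; the default assumes a local server.
generate() {
  curl -sS "${OLLAMA_URL:-http://localhost:11434}/api/generate" \
    -d "$(build_payload "${2:-deepseek-r1}" "$1")"
}

# Usage (requires a running server and a pulled model):
#   generate "Why is the sky blue?"
```

With streaming disabled, the reply is a single JSON object whose response field holds the generated text.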