Traditional machine learning (ML) typically involves training models on large labeled datasets to extract patterns and evaluate model performance on unseen samples. However, this method requires a substantial amount of labeled training data, making it challenging to apply artificial intelligence (AI) in industries like healthcare, retail, and manufacturing where data is limited.

This is where N-shot learning paradigms come into play, offering solutions to these challenges. In this article, we will delve into:

- Types of N-shot learning paradigms

- Different frameworks and approaches

- Applications

- Challenges and Future Research

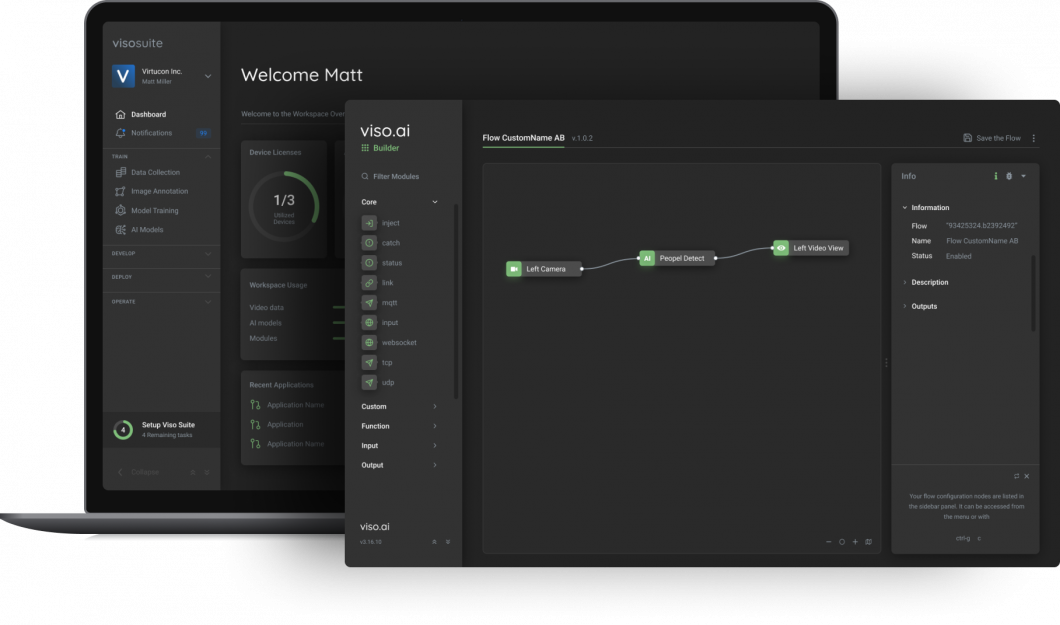

About us: Viso.ai offers an end-to-end computer vision solution – Viso Suite. Our software enables leading organizations to implement deep learning models efficiently for various downstream tasks. Request a demo here.

Types of N-Shot Learning

N-shot learning addresses the challenge of training deep learning and computer vision models with limited labeled data. Instead of learning every class from scratch, these techniques reuse general patterns learned from related data, so a model can adapt to new classes from only a few samples. This makes AI model development more scalable and computationally efficient.

N-shot learning models can also label data samples with unknown classes and feed the new dataset to supervised learning algorithms for enhanced training. The AI community categorizes N-shot approaches into few-shot, one-shot, and zero-shot learning. Let’s explore each in more detail.

Few-Shot Learning

Few-shot learning (FSL) is framed as an N-way K-shot problem: the model must distinguish N classes given only K labeled samples per class. For example, a 2-way 3-shot problem has two image classes, each with three examples.

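The support set S contains the N * K labeled samples (K for each of the N classes) that the model learns from, while the query set Q contains further samples from the same N classes that the model must classify. Training is organized into tasks known as episodes, and each episode consists of one support set and one query set.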
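To make the episode structure concrete, here is a minimal sketch of how one N-way K-shot episode could be sampled. The `sample_episode` function, the dictionary layout of `dataset`, and the `n_query` parameter are assumptions made for illustration; they do not come from any particular library.

```python
import random

def sample_episode(dataset, n_way, k_shot, n_query, rng=None):
    """Sample one N-way K-shot episode.

    dataset: dict mapping class label -> list of samples (hypothetical layout).
    Returns (support, query), each a list of (sample, label) pairs.
    """
    rng = rng or random.Random()
    # Pick the N classes that make up this episode.
    classes = rng.sample(sorted(dataset), n_way)
    support, query = [], []
    for label in classes:
        # Draw K support samples and n_query query samples without overlap.
        picked = rng.sample(dataset[label], k_shot + n_query)
        support += [(x, label) for x in picked[:k_shot]]
        query += [(x, label) for x in picked[k_shot:]]
    return support, query

# Toy data: 4 classes with 10 placeholder samples each.
toy = {c: [f"{c}_{i}" for i in range(10)] for c in ["cat", "dog", "fox", "owl"]}
support, query = sample_episode(toy, n_way=2, k_shot=3, n_query=2,
                                rng=random.Random(0))
print(len(support), len(query))  # 6 4
```

In a 2-way 3-shot episode the support set always holds 2 * 3 = 6 samples. The size of the query set is a separate design choice.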

Once training is complete, the model is evaluated on test tasks whose classes and samples differ from those used in training.

Single-Shot Learning

Single-shot or one-shot learning (OSL) is a specific case of few-shot learning in which the support set contains a single example per class (K = 1). For instance, in face recognition, an OSL model classifies a candidate’s face based on a single reference image.

Zero-Shot Learning

Zero-shot learning (ZSL) aims to classify data samples with zero training examples by training the model using a similar dataset of labeled classes and auxiliary information, such as text descriptions. This helps the model learn general patterns and relationships.

For example, a ZSL model trained on land animal images and descriptions can classify marine animals it has never seen by relating their descriptions to the patterns it has learned.

Few-Shot Learning Approaches

Few-shot learning approaches typically involve meta-learning, where the objective is to teach a model how to learn by classifying different samples in various training tasks.

Meta-learning includes data-based and parameter-level approaches. Algorithms such as Model-Agnostic Meta-Learning (MAML), Prototypical Networks, and Relation Networks are commonly used to solve the FSL problem.
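As an illustration of one of these algorithms, the sketch below shows the classification step used by Prototypical Networks: each class is represented by the mean of its support embeddings (its prototype), and a query is assigned to the nearest prototype. It assumes the embeddings have already been produced by some trained encoder, which is not shown, and all function names are hypothetical.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def build_prototypes(support):
    """support: list of (embedding, label) pairs -> dict label -> prototype."""
    by_class = {}
    for emb, label in support:
        by_class.setdefault(label, []).append(emb)
    return {label: mean_vector(embs) for label, embs in by_class.items()}

def classify(query_emb, prototypes):
    """Return the label whose prototype is closest to the query embedding."""
    return min(prototypes, key=lambda label: euclidean(query_emb, prototypes[label]))

# 2-way 3-shot toy support set with hand-written 2-D embeddings.
support = [
    ([0.0, 0.0], "a"), ([0.2, 0.0], "a"), ([0.1, 0.3], "a"),
    ([1.0, 1.0], "b"), ([0.8, 1.0], "b"), ([0.9, 0.7], "b"),
]
prototypes = build_prototypes(support)
print(classify([0.2, 0.1], prototypes))  # a
print(classify([0.9, 0.8], prototypes))  # b
```

In a full implementation the encoder is trained episode by episode so that query embeddings land close to the correct prototype. The sketch covers inference only.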

Single-Shot Learning Approaches

Single-shot techniques like Matching Networks, Siamese Neural Networks, and Memory-Augmented Neural Networks are used for one-shot learning tasks. These approaches help in tasks like image retrieval, action recognition, text classification, and face recognition.
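The idea behind a Siamese network can be sketched as follows: two inputs pass through the same encoder, and the distance between their embeddings decides whether they belong to the same class. The example assumes the embeddings are already computed and uses contrastive loss, which is one common training objective for such networks but not the only one. The function names and the `margin` value are illustrative assumptions.

```python
import math

def distance(a, b):
    """Euclidean distance between two embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def contrastive_loss(emb_a, emb_b, same, margin=1.0):
    """Pull matching pairs together; push non-matching pairs at least `margin` apart."""
    d = distance(emb_a, emb_b)
    if same:
        return d ** 2
    return max(0.0, margin - d) ** 2

def one_shot_classify(query_emb, references):
    """references: dict name -> single reference embedding (one shot per class)."""
    return min(references, key=lambda name: distance(query_emb, references[name]))

# One reference embedding per identity (hypothetical values).
references = {"alice": [0.0, 1.0], "bob": [1.0, 0.0]}
print(one_shot_classify([0.1, 0.9], references))               # alice
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], same=True))     # 25.0
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], same=False))    # 0.0
```

Because classification only compares distances to stored references, a new identity can be added by storing one more reference embedding, without retraining the encoder.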

Zero-Shot Learning Approaches

Zero-shot learning approaches include embedding-based and generative methods. Embedding-based approaches use feature extractors to map data samples and class descriptions into embeddings, then classify a sample by comparing its embedding with those of the candidate classes. Generative methods, such as generative adversarial networks (GANs) and variational autoencoders (VAEs), generate feature vectors for known and unknown classes to achieve balanced predictive performance.
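A minimal sketch of the embedding-based idea is shown below, assuming that an image encoder and a description encoder already place their outputs in a shared space. The attribute axes, the vectors, and the function names are hypothetical and chosen only to keep the example readable.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def zero_shot_classify(image_emb, class_embs):
    """Pick the class whose description embedding is most similar to the image."""
    return max(class_embs, key=lambda c: cosine(image_emb, class_embs[c]))

# Hypothetical attribute axes: [has_fins, has_fur, lives_in_water].
# None of these classes needs labeled training images; only descriptions.
class_embs = {
    "dolphin": [1.0, 0.0, 1.0],
    "otter":   [0.0, 1.0, 1.0],
    "horse":   [0.0, 1.0, 0.0],
}
image_emb = [0.9, 0.1, 0.8]  # stand-in for an encoder output
print(zero_shot_classify(image_emb, class_embs))  # dolphin
```

Adding a new class here only requires a new description embedding, which is what allows classification without any labeled examples of that class.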

N-Shot Learning Benchmarks

N-shot learning models are evaluated on benchmarks like MNIST, CUB-200-2011, and ImageNet using metrics like F1-score, top-1 accuracy, and mean average precision (mAP) to assess classification performance.
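For reference, two of these metrics can be computed from predicted and true labels as sketched below. The article does not say how F1 is averaged across classes, so macro averaging is an assumption here, and the function names are illustrative.

```python
def top1_accuracy(y_true, y_pred):
    """Fraction of samples whose top prediction matches the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (macro averaging, an assumption)."""
    scores = []
    for c in sorted(set(y_true)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); define it as 0 when the class never appears.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

y_true = ["a", "a", "b", "b"]
y_pred = ["a", "b", "b", "b"]
print(top1_accuracy(y_true, y_pred))       # 0.75
print(round(macro_f1(y_true, y_pred), 3))  # 0.733
```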

The state-of-the-art (SOTA) for one-shot learning is the Siamese Network, with an accuracy score of 97.5 on the MNIST dataset, while the CLIP model achieves 64.3% zero-shot accuracy on the ImageNet dataset.

N-Shot Learning Applications

N-shot learning has various applications across industries:

- Medical Image Analysis

- Visual-Question Answering (VQA)

- Autonomous Driving

- Image Retrieval and Action Recognition

- Text Classification

- Face Recognition

N-Shot Learning Challenges

Challenges in N-shot learning include hubness, overfitting, bias, computational power, and semantic loss. Addressing these challenges requires innovative research and development to enhance model performance.

Latest Research Trends

Recent research trends in N-shot learning involve integrating multimodal data, using Siamese neural nets for malware detection, and creating prompts for code retrieval. These advancements aim to improve model accuracy and efficiency in various applications.

N-Shot Learning – Key Takeaways

Key points to remember about N-shot learning:

- N-shot learning types include few-shot, one-shot, and zero-shot paradigms.

- N-shot learning approaches involve various algorithms like MAML, Prototypical Networks, Siamese Networks, and more.

- Challenges in N-shot learning include overfitting, bias, computational power, and semantic loss.

For more information on computer vision, check out our blog on Getting Started with Computer Vision.

To simplify your CV workflows, request a demo of Viso Suite, our comprehensive computer vision platform.