In today’s era of data-driven technology, edge computing and cloud computing are two buzzwords that are frequently heard together. While both technologies have their unique features and advantages, there seems to be a lot of confusion around their relationship. Some people wonder whether they are competitors or complementary technologies, while others want to know how they can work together to optimize their business operations. In this article, we’ll explore exactly what edge computing and cloud computing are, how they differ, and what their relationship is.

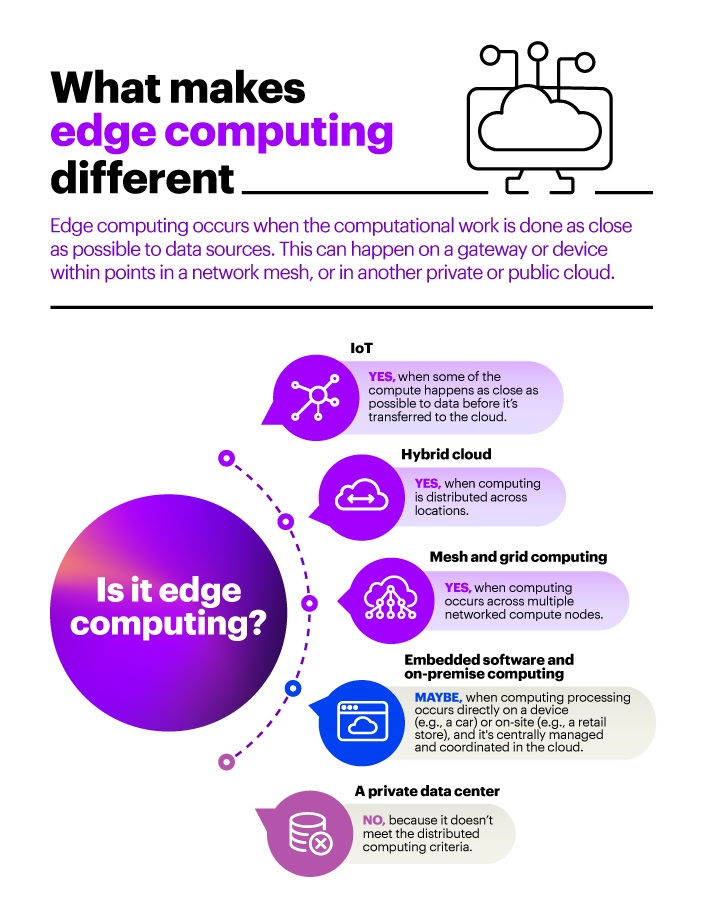

Edge computing refers to the practice of processing data at the edge of the network, closer to where the data is generated. It allows businesses to analyze and respond to data in real time, leading to faster insights and better decision-making. Cloud computing, on the other hand, refers to the delivery of computing services such as storage, databases, and processing power over the internet, allowing users to access data and applications from anywhere at any time. While edge computing focuses on processing data locally, cloud computing centralizes storage and large-scale processing in remote data centers. Despite their differences, both technologies share a common goal: making effective use of data to drive business success. In the following sections, we’ll look more closely at each technology and at how they work together.

Edge Computing and Cloud Computing

Edge computing and cloud computing are two of the most popular technology trends in today’s digital world. Edge computing processes data close to where it is generated instead of sending it to the cloud for processing. Cloud computing delivers storage and computing resources over the internet from remote data centers. Both technologies aim to improve the performance and efficiency of data processing, but the relationship between them is more complex than it may seem.

What is Edge Computing?

Edge computing is a distributed computing architecture that processes and stores data at the edge of the network, such as on the user’s device or on a local server. It is a way to reduce the need for data to be transferred to a central cloud for processing. Edge computing can help to reduce latency, increase security and privacy, and improve overall performance.
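To make the idea of reducing data transfer concrete, here is a minimal Python sketch, assuming a device that collects a window of sensor readings and sends one summary record upstream instead of every raw value. The names `summarize_window` and `WindowSummary` are hypothetical and not part of any particular edge platform.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class WindowSummary:
    """Compact record sent upstream instead of every raw reading."""
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_window(readings: list[float]) -> WindowSummary:
    """Reduce a window of raw sensor readings to a single summary on the edge device."""
    if not readings:
        raise ValueError("cannot summarize an empty window")
    return WindowSummary(
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=mean(readings),
    )


# Hypothetical one-second window of temperature readings from a local sensor.
raw = [21.0, 21.5, 22.0, 21.5]
summary = summarize_window(raw)
print(summary)  # one record crosses the network instead of four
```

The raw readings never leave the device; only the summary does, which is where the savings in bandwidth and transfer time come from.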

Edge computing has gained prominence relatively recently, and it is already used in various industries. For example, AI models that analyze images and video can run directly on cameras or nearby servers, so the footage does not have to be transferred to the cloud first. Edge computing is also widely used in the Internet of Things (IoT), where it enables real-time processing and analysis of sensor data.

What is Cloud Computing?

Cloud computing is the delivery of storage, processing power, and applications over the internet. Instead of relying on local servers or personal computers, it uses a network of remote servers in data centers to store, manage, and process data. Cloud computing is a popular option for companies and individuals who need to store large amounts of data or run compute-intensive tasks.

Cloud computing is flexible, accessible from anywhere with an internet connection, and often cost-effective, since users typically pay only for the resources they consume. It also offers scalability, allowing businesses to quickly increase or decrease the storage or processing power they use. Additionally, cloud platforms can run large-scale analytics, helping businesses make timely, data-driven decisions.
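Elastic scaling often comes down to a simple calculation: how many instances are needed to serve the current load. The sketch below is a hypothetical rule, not any cloud provider's API; `instances_needed` and its default bounds are illustrative assumptions. Managed autoscaling services apply logic of this kind for you, usually with extra safeguards such as delays between scaling actions.

```python
import math


def instances_needed(requests_per_second: float,
                     capacity_per_instance: float,
                     min_instances: int = 1,
                     max_instances: int = 20) -> int:
    """Return how many instances to run for the current load, within fixed bounds."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    # Round up so the provisioned capacity always covers the observed load.
    wanted = math.ceil(requests_per_second / capacity_per_instance)
    # Clamp to the configured floor and ceiling.
    return max(min_instances, min(max_instances, wanted))


print(instances_needed(450, 100))   # scale out during a traffic spike
print(instances_needed(30, 100))    # scale back in when load drops
print(instances_needed(5000, 100))  # capped at max_instances
```

The upper bound matters in practice: it keeps a sudden spike (or a bug that inflates the load metric) from provisioning an unbounded number of paid instances.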

Relationship Between Edge Computing and Cloud Computing

Edge computing and cloud computing are complementary technologies. Edge computing processes data at the edge of the network, close to where it is generated, while cloud computing stores and processes data in remote data centers.

Used together, they form a comprehensive data processing and storage solution: edge computing handles time-sensitive processing quickly, while cloud computing stores large amounts of data and provides scalability.

By combining edge computing and cloud computing, businesses can take advantage of real-time analytics and data processing while retaining the flexibility and scalability of the cloud. Because time-critical work happens near the data source, edge computing also reduces latency, so businesses can act on data quickly.
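A common way to combine the two is to give each reading a fast local path and a slower cloud path. The following Python sketch assumes a hypothetical `EdgeNode` that raises alerts on the device and batches all readings for upload; its `flush` method only records batches in memory and stands in for a real upload call.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeNode:
    """Hypothetical edge node: acts locally on urgent readings, batches everything for the cloud."""
    alert_threshold: float
    batch_size: int
    pending: list[float] = field(default_factory=list)
    alerts: list[float] = field(default_factory=list)
    uploaded_batches: list[list[float]] = field(default_factory=list)

    def handle(self, reading: float) -> None:
        # Time-critical path: decide on the device, with no network round trip.
        if reading > self.alert_threshold:
            self.alerts.append(reading)
        # Non-urgent path: buffer the reading for storage and analytics in the cloud.
        self.pending.append(reading)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Stand-in for an upload call; here it only records the batch in memory."""
        if self.pending:
            self.uploaded_batches.append(self.pending)
            self.pending = []


node = EdgeNode(alert_threshold=80.0, batch_size=3)
for value in [42.0, 85.5, 40.0, 39.5]:
    node.handle(value)

print(node.alerts)            # readings acted on immediately at the edge
print(node.uploaded_batches)  # readings shipped to the cloud in one batch
print(node.pending)           # still buffered, waiting for the next batch
```

Batching trades a little freshness in the cloud for fewer, larger transfers, while the alert path stays fast because it never waits on the network.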

Frequently Asked Questions

Edge computing is a type of distributed computing that brings computation and data storage closer to where they are needed. Cloud computing, on the other hand, relies on remote servers in centralized data centers to store and process data. Both technologies aim to give users efficient access to data and computing resources.

What is the difference between edge computing and cloud computing?

Edge computing processes data close to the device that generated it, such as at the point of collection or within a local network, while cloud computing provides servers and services in remote data centers that are accessed over the internet. Edge computing is typically used for latency-sensitive applications, such as many Internet of Things (IoT) applications, while cloud computing suits workloads that can tolerate the extra network round trip, such as many web and mobile application backends.
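The latency difference has a physical floor: signals take time to travel. Assuming light in optical fiber covers roughly 200,000 km per second, this sketch computes the minimum round-trip time from propagation delay alone. The distances are hypothetical examples, and real round trips are longer once routing, queuing, and processing are included.

```python
# Assumption: light in optical fiber travels at roughly 200,000 km/s (about two-thirds of c).
FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time (ms) from propagation delay alone.

    Real round trips are longer: routing hops, queuing, and server processing add to this.
    """
    # There and back (2 x distance), converted from seconds to milliseconds (x 1000).
    return 2 * distance_km * 1000 / FIBER_SPEED_KM_PER_S


edge_rtt = min_round_trip_ms(10)      # hypothetical on-site edge server ~10 km away
cloud_rtt = min_round_trip_ms(2_000)  # hypothetical cloud region ~2,000 km away
print(f"edge: {edge_rtt:.1f} ms, cloud: {cloud_rtt:.1f} ms")
```

Even under these idealized assumptions, the round trip to the distant region costs about 20 ms versus about 0.1 ms to the nearby server, a gap that matters for control loops that must react within a few milliseconds.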

What are the benefits of edge computing compared to cloud computing?

Edge computing has several advantages over cloud computing. It can reduce latency, because data does not have to travel to the cloud and back. It can improve network efficiency by reducing the amount of data sent over the network. It can also improve security and privacy, because sensitive data can be stored and processed locally, limiting how much of it is exposed in transit or held centrally. Finally, it can lower costs by reducing bandwidth usage and the amount of cloud storage and processing required, although it does not eliminate the need for cloud infrastructure altogether.
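One common technique for reducing network traffic at the edge is a deadband (report-by-exception) filter: the device transmits a reading only when it has changed meaningfully since the last value it sent. This is a minimal sketch; `deadband_filter`, the threshold, and the sample readings are hypothetical.

```python
def deadband_filter(readings: list[float], deadband: float) -> list[float]:
    """Keep a reading only if it differs from the last transmitted value by more than `deadband`.

    The first reading is always sent so the cloud has a baseline.
    """
    sent: list[float] = []
    for value in readings:
        if not sent or abs(value - sent[-1]) > deadband:
            sent.append(value)
    return sent


raw = [20.0, 20.1, 20.2, 21.5, 21.6, 23.0]
to_send = deadband_filter(raw, deadband=1.0)
print(to_send)  # only significant changes leave the device
print(f"{len(to_send)} of {len(raw)} readings transmitted")
```

Comparing against the last *transmitted* value, rather than the previous raw reading, prevents a slow drift from going unreported indefinitely.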

What applications are best suited for edge computing?

Edge computing is well suited to applications that require low latency and high throughput, such as Internet of Things (IoT) applications, autonomous vehicles, smart cities, and augmented reality/virtual reality (AR/VR) applications. It is also useful for applications with strict privacy or data-protection requirements, because sensitive data can be stored and processed locally instead of being sent over the network.
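Whether a workload belongs at the edge often comes down to comparing its response deadline with the achievable round-trip latency. The following Python sketch uses a hypothetical `choose_placement` rule that prefers the cloud whenever it is fast enough and falls back to the edge otherwise; the deadlines and latency figures are illustrative assumptions, not measurements.

```python
def choose_placement(deadline_ms: float, edge_rtt_ms: float, cloud_rtt_ms: float) -> str:
    """Return "cloud", "edge", or "neither" for a task with the given response deadline.

    Hypothetical rule: prefer the cloud whenever it meets the deadline (more capacity,
    central management); otherwise fall back to the edge if it is fast enough.
    """
    if cloud_rtt_ms <= deadline_ms:
        return "cloud"
    if edge_rtt_ms <= deadline_ms:
        return "edge"
    return "neither"  # no option meets the deadline; the task itself needs rethinking


# Illustrative figures only: ~5 ms to an on-site edge server, ~60 ms to a cloud region.
EDGE_RTT_MS, CLOUD_RTT_MS = 5.0, 60.0
deadlines_ms = {
    "vehicle obstacle braking": 10.0,
    "AR headset frame update": 20.0,
    "fleet analytics dashboard": 2000.0,
}
placements = {task: choose_placement(d, EDGE_RTT_MS, CLOUD_RTT_MS)
              for task, d in deadlines_ms.items()}
for task, where in placements.items():
    print(f"{task}: {where}")
```

A real placement decision would also weigh bandwidth, cost, and data-protection rules, but the deadline check is usually the first filter.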

What are some of the challenges of edge computing?

Some of the challenges of edge computing include scalability, manageability, and security. Edge deployments can be difficult to scale as the number of devices and data sources grows. They can also be difficult to manage, because they consist of a distributed fleet of devices, sensors, and gateways that must be monitored and updated. Finally, edge devices are often widely dispersed and physically accessible, which exposes them to security threats such as malware, tampering, and distributed denial-of-service (DDoS) attacks.
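One common way to keep a large fleet manageable is to roll out software updates in stages, starting with a small canary group so problems surface before every device is affected. This Python sketch splits a fleet into update waves; `rollout_waves`, the device names, and the wave sizes are hypothetical, and a real deployment would also check device health between waves.

```python
def rollout_waves(device_ids: list[str], wave_sizes: list[int]) -> list[list[str]]:
    """Split a device fleet into successive update waves (e.g. a small canary group first).

    Any devices left after the listed wave sizes go into one final wave.
    """
    waves: list[list[str]] = []
    start = 0
    for size in wave_sizes:
        if start >= len(device_ids):
            break
        waves.append(device_ids[start:start + size])
        start += size
    if start < len(device_ids):
        waves.append(device_ids[start:])
    return waves


fleet = [f"gateway-{n:03d}" for n in range(1, 11)]  # ten hypothetical edge gateways
waves = rollout_waves(fleet, [1, 3])
for number, wave in enumerate(waves, start=1):
    print(number, wave)
```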

How does edge computing complement cloud computing?

Edge computing complements cloud computing by handling data at the edge of the network, such as at the point of collection or in a local network, before it is sent to the cloud. Filtering and aggregating data locally reduces the volume that crosses the network, which improves network efficiency and lowers latency for time-sensitive tasks, while the cloud still receives the data it needs for storage and large-scale analysis. Edge processing can also help protect data, because sensitive information can be processed or anonymized locally rather than transmitted in raw form.
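As one example of protecting data before it leaves the device, the edge node can replace personal identifiers with a keyed hash (HMAC) so the cloud can still group records by user without receiving the raw ID. This is a minimal sketch using Python's standard `hmac` and `hashlib` modules; the `pseudonymize` function, record fields, and key are hypothetical, and pseudonymization of this kind reduces exposure but is not full anonymization.

```python
import hashlib
import hmac


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Replace the `user_id` field with a keyed SHA-256 hash before the record leaves the device.

    The same user always maps to the same token, so the cloud can still group records,
    but the token cannot be recomputed or linked back to the ID without the key.
    """
    token = hmac.new(secret_key, record["user_id"].encode("utf-8"), hashlib.sha256).hexdigest()
    cleaned = {k: v for k, v in record.items() if k != "user_id"}
    cleaned["user_token"] = token
    return cleaned


# Hypothetical key; a real deployment would keep it in secure storage on the device.
KEY = b"example-key-kept-on-the-edge-device"
r1 = pseudonymize({"user_id": "alice", "heart_rate": 72}, KEY)
r2 = pseudonymize({"user_id": "alice", "heart_rate": 75}, KEY)
print("user_id" in r1)                        # the raw identifier is gone
print(r1["user_token"] == r2["user_token"])   # records remain linkable by token
```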

In conclusion, the relationship between edge computing and cloud computing is symbiotic. Cloud computing provides centralized management and storage of data, while edge computing enables faster processing and analysis of data at the edge of the network. Edge computing also makes real-time applications such as autonomous vehicles and industrial automation practical, since these require low latency and high bandwidth. Cloud computing, in turn, provides the scalability and flexibility that edge devices need to access shared data and services.

As technology continues to evolve, the relationship between edge computing and cloud computing will become increasingly important. Together, they will enable the development of new and innovative applications that will transform the way we live and work. As such, it is crucial for organizations to understand the benefits and limitations of both technologies and implement a comprehensive strategy that leverages both edge and cloud computing to achieve their business objectives. By doing so, they will be able to stay ahead of the competition and provide a seamless and engaging experience for their customers.