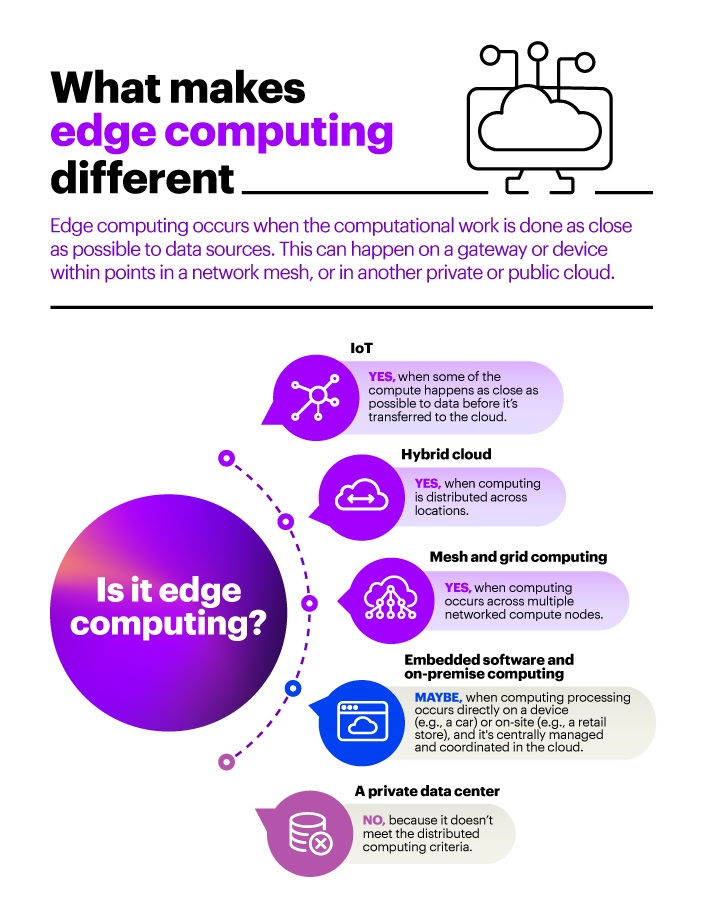

Technology is advancing quickly. With the growth of the Internet of Things (IoT) and the increasing volume of data that connected devices generate, centralized cloud systems can struggle to respond quickly enough. This is where edge computing comes in. Edge computing is a distributed computing model that brings computation and data storage closer to the location where they are needed. This approach reduces latency and can improve the overall performance of the system.

So, how does edge computing reduce latency? To understand this, we first need to understand what latency is. Latency is the delay between a request for data and the arrival of the response. In traditional cloud computing systems, data is sent to a central server for processing and storage, and the delay grows with the distance the data has to travel. With edge computing, data processing and storage happen at the edge of the network, closer to the source of the data. This shortens the distance the data has to travel and can lower latency significantly. Edge computing also makes real-time processing practical, which suits applications that require low latency, such as autonomous vehicles or virtual reality.
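To make the effect of distance concrete, here is a rough back-of-the-envelope sketch. It assumes signals travel through optical fiber at about 200,000 km/s and ignores routing, queuing, and processing time. The two distances are hypothetical values chosen for illustration, not measurements.

```python
# Rough propagation-delay estimate.
# Assumption: signals travel through optical fiber at about 200,000 km/s
# (roughly two thirds of the speed of light in a vacuum).
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Return the round-trip propagation delay in milliseconds."""
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


cloud_rtt = round_trip_ms(2000)  # hypothetical distant data center
edge_rtt = round_trip_ms(20)     # hypothetical nearby edge node

print(f"cloud: {cloud_rtt:.1f} ms, edge: {edge_rtt:.1f} ms")
# prints "cloud: 20.0 ms, edge: 0.2 ms"
```

Real round trips are longer than this, because every router and server along the way adds its own delay, but the estimate shows why distance alone sets a floor on latency.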

Edge computing reduces latency by processing data close to its source. Data no longer has to travel long distances for every request, which leads to faster response times and a better user experience. Edge computing also allows some data to be processed on the device itself, so those requests never need to reach a cloud server. This reduces the amount of data that has to be transmitted and lowers latency further.

How Does Edge Computing Reduce Latency?

Edge computing moves computing resources and applications as close as possible to the user or the data source. Every hop between network devices, such as routers and gateways, adds a small delay, so fewer hops mean lower latency. Businesses can move computing resources from the core of the data center to the edge of the network, which reduces how often devices need to communicate with the cloud.
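The effect of hop count can be sketched with a simple additive model in which total latency is the sum of the delays on each hop. The per-hop values below are hypothetical and only illustrate the shape of the comparison.

```python
def path_latency_ms(hop_delays_ms: list[float]) -> float:
    """Total one-way latency modeled as the sum of per-hop delays."""
    return sum(hop_delays_ms)


# Hypothetical per-hop delays in milliseconds (illustrative, not measured).
# device -> access network -> ISP -> backbone -> cloud region -> server
cloud_path = [1.0, 4.0, 12.0, 15.0, 8.0]
# device -> access network -> edge node
edge_path = [1.0, 2.0]

saved = path_latency_ms(cloud_path) - path_latency_ms(edge_path)
print(f"{saved:.1f} ms saved")
# prints "37.0 ms saved"
```

The model is deliberately simple. In practice per-hop delay varies with congestion, but removing hops removes their contribution entirely.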

What Is Edge Computing?

Edge computing is the practice of moving computing power out of centralized cloud data centers and closer to the user. Because requests are handled near where they originate, fewer of them need to travel to the cloud, which in turn reduces latency.

Edge computing is an important part of the Internet of Things (IoT). Data collected by IoT devices, such as sensors and cameras, can be processed much faster by a nearby edge device than by a distant server. This reduces latency and allows businesses to respond quickly to changes in their environment.

How Does Edge Computing Reduce Latency?

Edge computing reduces latency by reducing the number of hops between the user and the data source. When computing resources sit closer to the user, fewer requests have to reach the cloud, so responses arrive sooner.

Edge computing also reduces the amount of data that needs to be sent to and from the cloud. Less data on the network means less time spent on transmission and less congestion, which lowers latency further and makes it easier for businesses to respond quickly to changes in their environment.
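One common way to cut the data sent to the cloud is to summarize readings at the edge and upload only the summary. The sketch below is a minimal illustration of that idea. The `summarize` helper, the synthetic temperature readings, and the JSON payload format are all assumptions made for the example.

```python
import json
from statistics import mean


def summarize(readings: list[float]) -> dict:
    """Reduce a batch of raw readings to a small summary (hypothetical helper)."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }


# Synthetic sensor data: 600 temperature readings, for illustration only.
readings = [20.0 + 0.1 * (i % 10) for i in range(600)]

# Payload if every raw reading is uploaded to the cloud.
raw_payload = json.dumps(readings).encode("utf-8")
# Payload if the edge node uploads only the summary.
summary_payload = json.dumps(summarize(readings)).encode("utf-8")

print(f"raw: {len(raw_payload)} bytes, summary: {len(summary_payload)} bytes")
```

The trade-off is that the cloud no longer sees individual readings, so this approach fits cases where aggregate values are enough.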

Benefits of Edge Computing

Edge computing provides businesses with a number of benefits, including reduced latency, increased security, and improved scalability. With lower latency, businesses can respond more quickly to changes in their environment. This can lead to improved customer service and better decision-making.

Edge computing can also improve security. When sensitive data is processed close to where it is generated, less of it has to cross the wider network, which reduces its exposure in transit and can lower the risk of data breaches. This can lead to increased customer trust and improved customer satisfaction.

Frequently Asked Questions About Edge Computing and Latency Reduction

Edge computing is a distributed computing model that runs applications and processes data closer to the edge of the network. It reduces latency by shortening the path between an application and the data and resources it needs, resulting in faster response times and improved performance.

What Is Edge Computing?

Edge computing is a distributed computing model that brings computing resources closer to the edge of the network. By processing data and applications closer to the edge of the network, edge computing reduces latency, or the time it takes for a request to be processed and a response to be received. This reduces the need for data to travel over long distances, resulting in faster response times and improved performance.

How Does Edge Computing Reduce Latency?

Edge computing reduces latency by shortening the path between an application and the data and resources it needs. Because data is processed closer to the source, requests do not have to travel long distances, resulting in faster response times. Edge computing also makes real-time analysis practical: decisions can be made locally instead of waiting for a round trip to a distant server.
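Whether a real-time decision is feasible can be framed as a latency budget: the network round trip plus the processing time has to fit within the application's deadline. The sketch below shows that check. The deadline and all timing values are hypothetical numbers chosen for illustration.

```python
def meets_deadline(network_rtt_ms: float, processing_ms: float,
                   deadline_ms: float) -> bool:
    """Return True if round trip plus processing fits within the deadline."""
    return network_rtt_ms + processing_ms <= deadline_ms


# Hypothetical deadline for a real-time application.
DEADLINE_MS = 20.0

# Hypothetical timings: the cloud server processes faster but is farther away.
cloud_ok = meets_deadline(network_rtt_ms=40.0, processing_ms=5.0,
                          deadline_ms=DEADLINE_MS)
edge_ok = meets_deadline(network_rtt_ms=2.0, processing_ms=8.0,
                         deadline_ms=DEADLINE_MS)

print(f"cloud meets deadline: {cloud_ok}, edge meets deadline: {edge_ok}")
# prints "cloud meets deadline: False, edge meets deadline: True"
```

In this example the edge node is slower at processing but still meets the deadline, because the network round trip dominates the total.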

What Benefits Does Edge Computing Provide?

Edge computing provides a number of benefits, including improved performance, better scalability, reduced latency, and lower costs. Because applications sit closer to the data and resources they need, requests are processed and answered in less time. Edge computing can also scale more flexibly than a purely centralized cloud model, since capacity can be added by deploying nodes in the locations that need it. Finally, edge computing can reduce bandwidth costs by cutting the amount of data sent over long distances.

What Are the Challenges of Edge Computing?

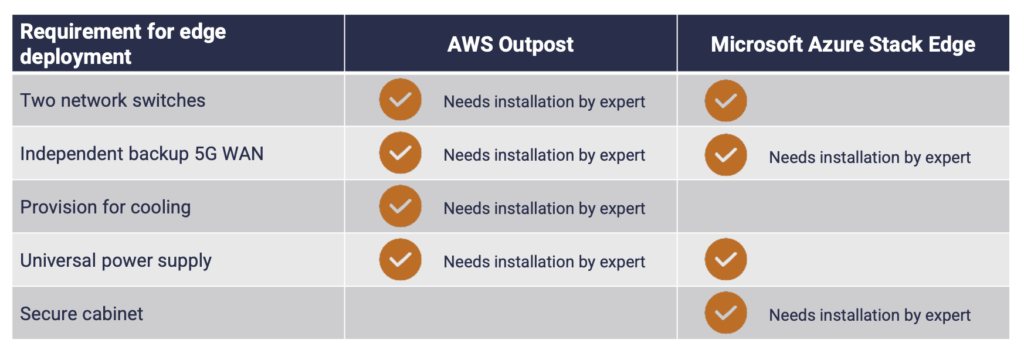

The main challenges of edge computing are keeping data secure and keeping devices properly managed. Edge devices are often installed in remote locations, which makes them difficult to monitor and maintain. They also need to be properly secured, because they often handle sensitive data. Finally, edge computing can be difficult to set up, as it requires specialized hardware and software.

What Are the Use Cases for Edge Computing?

Edge computing can be used in a variety of applications, including the Internet of Things (IoT), connected cars, robotics, and smart cities. In IoT deployments, edge computing is used to process and analyze data from connected devices in real time. In connected cars, it processes sensor data to optimize driving performance. In robotics and smart cities, it is used to process data and make decisions in real time.

In conclusion, edge computing is an effective way to reduce latency. By bringing processing power closer to the source of data, it spares much of that data the long trip to centralized servers. This results in faster processing times, reduced network congestion, and improved overall performance. With the rise of the Internet of Things (IoT) and the increasing demand for real-time data processing, edge computing is becoming more important than ever.

In addition to reducing latency, edge computing offers several other benefits, including increased security, improved reliability, and reduced costs. Processing data locally reduces the amount of data sent across the network, which can lower bandwidth and network infrastructure costs for businesses, although edge deployments require their own hardware. Edge computing can also provide a more secure environment for data processing, since less sensitive data leaves the site where it is generated, provided the edge devices themselves are properly secured. Overall, edge computing is a powerful technology that is changing the way we think about data processing and is likely to have a significant impact on the future of technology.