Machine learning has become an indispensable tool for businesses across many industries. From predicting customer behavior to detecting fraud, machine learning algorithms analyze large amounts of data and make predictions from it. Training these models is far faster on a GPU than on a CPU, so the choice of graphics card matters. The RTX 3050 is a popular card that has drawn attention from machine learning enthusiasts. But is it good for machine learning?

The RTX 3050 is an entry-level card in NVIDIA's RTX 30 series, designed primarily for gaming. It can also handle machine learning tasks. The desktop model has 2560 CUDA cores and 8GB of GDDR6 memory, while the laptop variant has 2048 CUDA cores and 4GB of GDDR6. That is enough for small and mid-sized deep learning models. But is it worth investing in an RTX 3050 for machine learning? In this article, we will explore the capabilities of the RTX 3050 and help you decide if it is the right choice for your machine learning needs.

The RTX 3050 is a capable entry-level card for machine learning applications. Its CUDA cores handle general GPU compute, and its dedicated Tensor Cores accelerate the matrix math at the heart of deep learning. It also has RT cores, but these accelerate ray tracing in games and rendering rather than machine learning. The card is power efficient as well, and it is available in both desktop and laptop versions.

What is Machine Learning?

Machine learning is an application of artificial intelligence (AI) that gives systems the ability to learn and improve from experience without being explicitly programmed. It focuses on developing computer programs that can access data and use it to learn for themselves. Learning begins with observations or data, such as examples, direct experience, or instruction. The program looks for patterns in that data and uses them to make better decisions in the future. The primary aim is to allow computers to learn with as little human intervention as possible and to adjust their actions accordingly.
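
To make "learning from examples" concrete, here is a minimal sketch in plain Python. It fits a straight line to five example points by gradient descent. The function name `fit_line`, the learning rate, and the sample data are illustrative assumptions, not taken from any particular library.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Example data generated from y = 2x + 1 (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

The program is never told the rule behind the data. It recovers a slope close to 2 and an intercept close to 1 purely from the examples. Deep learning follows the same idea with millions of parameters, which is where a GPU starts to matter.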

Is RTX 3050 Good for Machine Learning?

The RTX 3050 is a graphics card from NVIDIA that is designed for gaming but can also be used for machine learning. It is built on the Ampere architecture and includes Tensor Cores that accelerate the matrix operations used by machine learning algorithms. The desktop card comes with 8GB of GDDR6 memory on a 128-bit memory bus. This makes it a solid choice for gaming and a workable one for entry-level machine learning.

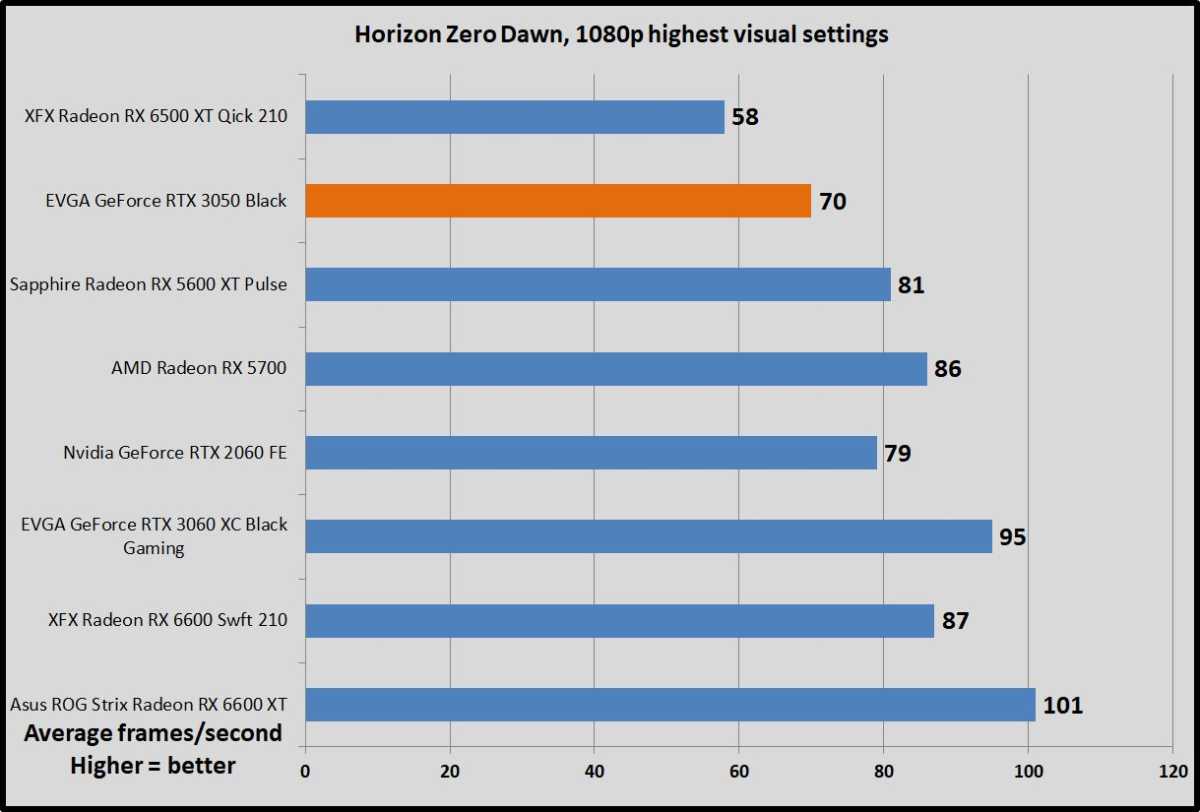

RTX 3050 Performance

The RTX 3050 can run a range of machine learning algorithms. It features Tensor Cores, 8GB of GDDR6 memory and a 128-bit memory bus, which suits it to small and mid-sized models. It can train and run convolutional neural networks (CNNs), recurrent neural networks (RNNs) and other deep learning architectures, as long as the model and batch fit in memory. It can also run inference tasks such as image recognition, object detection and natural language processing.
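
Memory bus width matters because it caps how quickly data can reach the cores. The sketch below computes peak theoretical bandwidth from bus width and per-pin data rate. It assumes the 128-bit bus and 14 Gbps GDDR6 of the desktop 8GB card, so check those figures against NVIDIA's specification page for your exact model. The function name is illustrative.

```python
def memory_bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Peak theoretical bandwidth in GB/s.

    bus_width_bits / 8 is the number of bytes moved per transfer across the
    whole bus; data_rate_gbps is the effective per-pin data rate.
    """
    return bus_width_bits / 8 * data_rate_gbps


# Assumed figures for the desktop RTX 3050 8GB: 128-bit bus, 14 Gbps GDDR6.
print(memory_bandwidth_gb_s(128, 14))  # 224.0
```

This is an upper bound. Real workloads see less, and models that are bound by memory traffic rather than arithmetic feel the narrower bus most.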

The card can also run demanding games such as Battlefield V and Red Dead Redemption 2 at 1080p, with frame rates that depend on the quality settings. It can run virtual reality (VR) titles and handle heavier workloads such as video editing and 3D rendering, though with less headroom than higher-end cards.

Pros and Cons of RTX 3050 for Machine Learning

The pros of the RTX 3050 for machine learning are that it is an affordable card with CUDA and Tensor Core support, so it works with the major machine learning frameworks. It comes with 8GB of GDDR6 memory on a 128-bit memory bus, which is enough for many small and mid-sized models. It can also run inference tasks such as image recognition, object detection and natural language processing.

The cons of the RTX 3050 for machine learning are that it is considerably less powerful than the RTX 3080. It has fewer CUDA cores, less memory and a narrower memory bus, so training takes longer and larger models may not fit in memory at all. Heavy workloads such as video editing, 3D rendering and virtual reality (VR) titles will run, but less smoothly than on the RTX 3080. The card draws far less power than the RTX 3080, but that comes with much lower throughput.

Frequently Asked Questions

The RTX 3050 is a graphics card that is suitable for running machine learning algorithms at an entry level. Built on NVIDIA's Ampere architecture, it handles both graphics and compute tasks well for its price. This makes it a reasonable choice for getting started with machine learning applications.

Is RTX 3050 Good for Machine Learning?

Yes, the RTX 3050 is a good choice for entry-level machine learning applications. This graphics card is built on the Ampere architecture, which provides solid performance for both graphics and compute tasks. The desktop card is equipped with 8GB of GDDR6 memory, which is enough for small and mid-sized models, although very large models will not fit. With its support for CUDA and Tensor Cores, the RTX 3050 can accelerate machine learning workloads significantly compared with a CPU, allowing for faster training and inference times.
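
A quick way to judge whether a model is likely to fit in 8GB is to estimate its training state. The sketch below uses a common rule of thumb for FP32 training with the Adam optimizer: about 16 bytes per parameter for weights, gradients and two optimizer buffers. It ignores activations, so the `usable_fraction` of 0.7 is an assumed safety margin rather than a measured value. The parameter counts are approximate, commonly cited figures used for illustration only.

```python
GIB = 1024 ** 3
# FP32 weights (4 bytes) + FP32 gradients (4) + two Adam moment buffers (8).
BYTES_PER_PARAM = 4 + 4 + 8


def training_state_gib(num_params, bytes_per_param=BYTES_PER_PARAM):
    """Memory for weights, gradients and optimizer state, excluding activations."""
    return num_params * bytes_per_param / GIB


def fits_in_vram(num_params, vram_gib=8, usable_fraction=0.7):
    """True if the training state fits in the share of VRAM assumed free for it."""
    return training_state_gib(num_params) <= vram_gib * usable_fraction


# Approximate parameter counts (illustrative only).
models = {"ResNet-50": 25.6e6, "BERT-base": 110e6, "GPT-2 XL": 1.5e9}
for name, params in models.items():
    print(f"{name}: {training_state_gib(params):.2f} GiB, fits: {fits_in_vram(params)}")
```

Under these assumptions the first two models fit comfortably and the third does not. Mixed precision, smaller batches or a lighter optimizer can shift the boundary, so treat this as a first estimate only.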

The RTX 3050 is also well-suited for AI-based applications. Its Tensor Cores support reduced-precision formats, including INT8 and INT4 operations. These formats let the RTX 3050 perform deep learning and neural network operations with greater efficiency, which speeds up inference in particular, usually at the cost of a small loss in accuracy. Additionally, the RTX 3050 offers more memory bandwidth than previous xx50-class cards such as the GTX 1650, which helps with larger models and batches. Overall, the RTX 3050 is a sound choice for anyone looking to start using machine learning and AI in their applications.
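
To show what INT8 operation means in practice, here is a minimal sketch of symmetric per-tensor quantization in plain Python. It is a simplified illustration, not how any specific framework implements it, and the function names and sample weights are made up for the example.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit integers using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric INT8 range.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integer codes."""
    return [q * scale for q in quantized]


weights = [0.8234, -0.4111, 0.0567, -1.27, 0.3349]  # illustrative values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(max_error)
```

Each weight now takes one byte instead of four, and the rounding error per weight is at most half a quantization step. That is the trade: less memory and faster arithmetic in exchange for a small amount of precision.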

In conclusion, the RTX 3050 is a good option for those who are looking for an affordable graphics card for machine learning. Its performance is solid for the price, and its Tensor Cores make it a practical choice for deep learning and other AI applications. However, it is important to note that its 8GB of memory and modest core count mean it cannot handle large models and datasets as efficiently as higher-end GPUs. Therefore, if you are working on large-scale projects, it may be worth investing in a more powerful graphics card.

Overall, the RTX 3050 is a great entry-level option for those who are just starting out with machine learning. Its affordability and impressive performance make it a popular choice among students and hobbyists. However, if you are working on more demanding projects, it may be worth considering a more powerful GPU to ensure that you can work efficiently and effectively.