Introduction

We have reached an era in which your phone or computer can understand the objects in an image, thanks to technologies like YOLO and SAM.

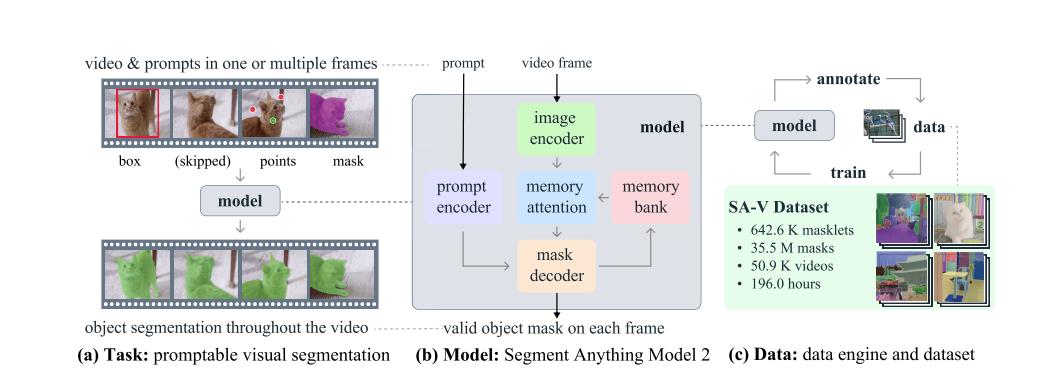

Meta’s Segment Anything Model (SAM) can identify objects in an image and separate them from the rest of the scene without being trained on those specific images. It’s like a digital magician, able to understand each object in an image with just a wave of its virtual wand. Following the release of Llama 3.1, Meta announced SAM 2 on July 29, 2024: a unified model for real-time object segmentation in both images and videos that achieves state-of-the-art performance.

SAM 2 offers numerous real-world applications. For instance, its outputs can be integrated with generative video models to create innovative video effects and unlock new creative possibilities. Additionally, SAM 2 can enhance visual data annotation tools, speeding up the development of more advanced computer vision systems.

What is Image Segmentation in SAM?

Segment Anything (SAM) introduces a promptable image segmentation task in which a segmentation mask is generated from an input prompt, such as a bounding box or a point that indicates the object of interest. Trained on the SA-1B dataset, SAM supports zero-shot segmentation with flexible prompting, which makes it suitable for a wide range of applications.

Follow-up work has improved both SAM’s quality and its efficiency. HQ-SAM enhances output quality by using a High-Quality output token and by training on fine-grained masks. EfficientSAM, MobileSAM, and FastSAM aim to make the model more efficient for broader real-world use. SAM’s success has also led to its application in fields like medical imaging, remote sensing, motion segmentation, and camouflaged object detection.
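
To make the idea of a prompt more concrete, here is a minimal sketch of how point and box prompts could be represented and sanity-checked before they are handed to a model. The `SegmentationPrompt` class and its `validate` method are hypothetical names introduced only for illustration and are not part of SAM. The conventions assumed here (pixel coordinates as `(x, y)`, label `1` for a foreground click and `0` for a background click, boxes as `(x_min, y_min, x_max, y_max)`) follow the layout commonly used with SAM-style predictors.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class SegmentationPrompt:
    """Hypothetical container for a promptable-segmentation request."""

    # Click prompts as (x, y) pixel coordinates.
    points: List[Tuple[float, float]] = field(default_factory=list)
    # One label per point: 1 = foreground (on the object), 0 = background.
    labels: List[int] = field(default_factory=list)
    # Optional bounding box as (x_min, y_min, x_max, y_max) in pixels.
    box: Optional[Tuple[float, float, float, float]] = None

    def validate(self, width: int, height: int) -> None:
        """Raise ValueError if the prompt does not fit the image size."""
        if not self.points and self.box is None:
            raise ValueError("a prompt needs at least one point or a box")
        if len(self.points) != len(self.labels):
            raise ValueError("each point needs exactly one label")
        for x, y in self.points:
            if not (0 <= x < width and 0 <= y < height):
                raise ValueError(f"point ({x}, {y}) lies outside the image")
        if any(label not in (0, 1) for label in self.labels):
            raise ValueError("labels must be 0 (background) or 1 (foreground)")
        if self.box is not None:
            x0, y0, x1, y1 = self.box
            if not (0 <= x0 < x1 <= width and 0 <= y0 < y1 <= height):
                raise ValueError("box must be non-empty and inside the image")


# A single foreground click near the centre of a 640x480 image.
click = SegmentationPrompt(points=[(320, 240)], labels=[1])
click.validate(width=640, height=480)

# A box prompt drawn around the object of interest.
boxed = SegmentationPrompt(box=(100, 50, 400, 300))
boxed.validate(width=640, height=480)
```

Either prompt describes the same request to the model: "return the mask of the object indicated here". Combining a box with a few foreground and background clicks is a common way to resolve ambiguous cases.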

Dataset Used

Many datasets have been developed to support the video object segmentation (VOS) task…

How to install SAM 2?

To start the installation, open up a Paperspace Notebook and start the GPU machine of your choice.

```
# Clone the repo
!git clone https://github.com/facebookresearch/segment-anything-2.git

# Move into the folder (%cd keeps the notebook in this directory for later cells)
%cd segment-anything-2

# Install the necessary requirements
!pip install -e .
```
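
Once the install finishes, a quick check can confirm that the package is importable before you go any further. The snippet below is a small sketch using only the Python standard library. The helper names `module_available` and `gpu_driver_visible` are made up for this example, and looking for `nvidia-smi` on the PATH is only a rough hint that an NVIDIA driver is present, not proof that PyTorch can use the GPU.

```python
import importlib.util
import shutil


def module_available(name: str) -> bool:
    """Return True if the top-level module `name` can be found for import."""
    return importlib.util.find_spec(name) is not None


def gpu_driver_visible() -> bool:
    """Rough check: True if the `nvidia-smi` tool is on the PATH."""
    return shutil.which("nvidia-smi") is not None


# `sam2` is the package installed by the repository; `torch` is its main dependency.
for pkg in ("sam2", "torch"):
    status = "found" if module_available(pkg) else "MISSING"
    print(f"{pkg}: {status}")

print("nvidia-smi on PATH:", gpu_driver_visible())
```

If `sam2` is reported as missing, re-running the install cell from inside the cloned folder is usually the first thing to try.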

How to use SAM 2?
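
As a starting point, the sketch below segments the object under a single click in one image. It relies on `build_sam2` and `SAM2ImagePredictor` from the installed `sam2` package. The function names `segment_from_click` and `pick_best_mask` are hypothetical helpers written for this example, and the checkpoint path and config name you pass in are assumptions that depend on which model weights you downloaded and which version of the repository you cloned.

```python
from typing import Sequence


def pick_best_mask(scores: Sequence[float]) -> int:
    """Return the index of the highest-scoring candidate mask."""
    if len(scores) == 0:
        raise ValueError("no candidate masks returned")
    return max(range(len(scores)), key=lambda i: scores[i])


def segment_from_click(image, x, y, checkpoint, model_cfg, device="cuda"):
    """Segment the object under pixel (x, y) of an RGB image array (H x W x 3).

    Heavy imports are deferred so that `pick_best_mask` can be used and
    tested on a machine without a GPU or the sam2 package.
    """
    import numpy as np
    import torch
    from sam2.build_sam import build_sam2
    from sam2.sam2_image_predictor import SAM2ImagePredictor

    # Build the model from a config name and a checkpoint file (both assumed).
    predictor = SAM2ImagePredictor(build_sam2(model_cfg, checkpoint, device=device))

    with torch.inference_mode():
        # Compute the image embedding once; prompts can then be tried cheaply.
        predictor.set_image(image)
        masks, scores, _ = predictor.predict(
            point_coords=np.array([[x, y]], dtype=np.float32),
            point_labels=np.array([1], dtype=np.int32),  # 1 = foreground click
            multimask_output=True,  # ask for several candidate masks
        )

    # Keep the candidate the model itself rates highest.
    return masks[pick_best_mask(scores)]
```

A call might look like `segment_from_click(image, 320, 240, checkpoint="./checkpoints/sam2_hiera_large.pt", model_cfg="sam2_hiera_l.yaml")`, where both file names are placeholders to replace with the ones shipped with your copy of the repository. Requesting multiple masks and keeping the best-scoring one is a reasonable default for a single click, since one point is often ambiguous between a part and the whole object.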

Summary

- SAM 2 Overview: SAM 2 builds on SAM by extending its capabilities from images to videos…

SAM 2 Limitations

- Performance and Improvement: While SAM 2 performs well in segmenting objects in images and short videos…

References